You Are on AWS, Azure, or Google’s Cloud. But Are You Transforming on the Cloud?

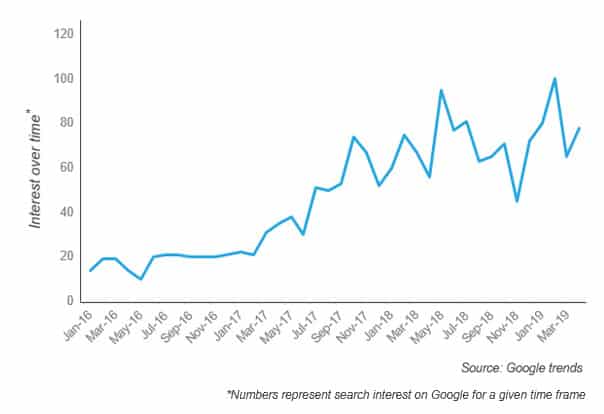

There is no questioning the ubiquity of cloud delivery models, whether private, public, or hybrid. The cloud has become a crucial technology delivery model across enterprises, and you would be hard-pressed to find an enterprise that has not adopted at least some form of cloud service.

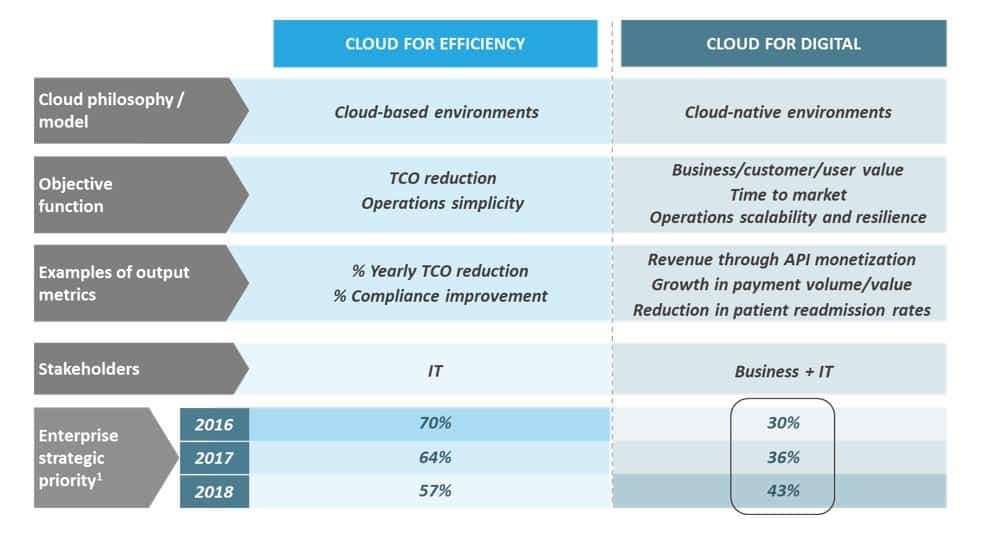

However, adopting the cloud and leveraging it to transform the business are two very different things. In the Cloud 1.0 and Cloud 2.0 waves, most enterprises began their adoption journey by lifting and shifting workloads, and over the years they reduced their Capex and Opex spend by 30-40 percent. Enamored with these savings and believing their job was done, many stopped there. True, the complexity of the lifted-and-shifted workloads grew in the move from Cloud 1.0 to Cloud 2.0, for example from web portals to collaboration platforms and even ERP systems. But it was still lift and shift, with only minor refactoring.

This shows that most enterprises are, unfortunately, treating the cloud as just another hosting model rather than a transformative platform.

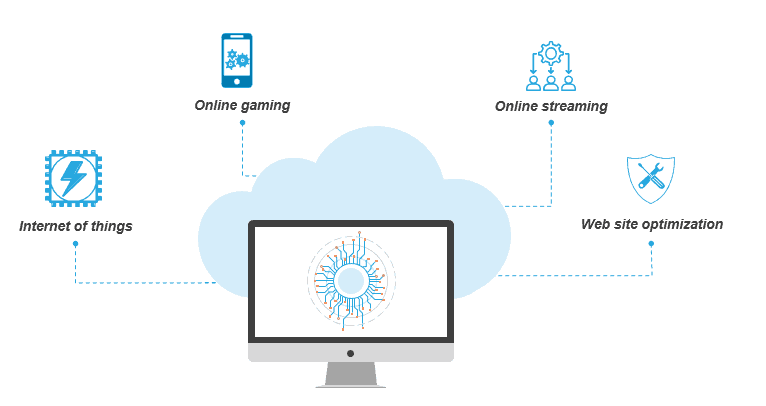

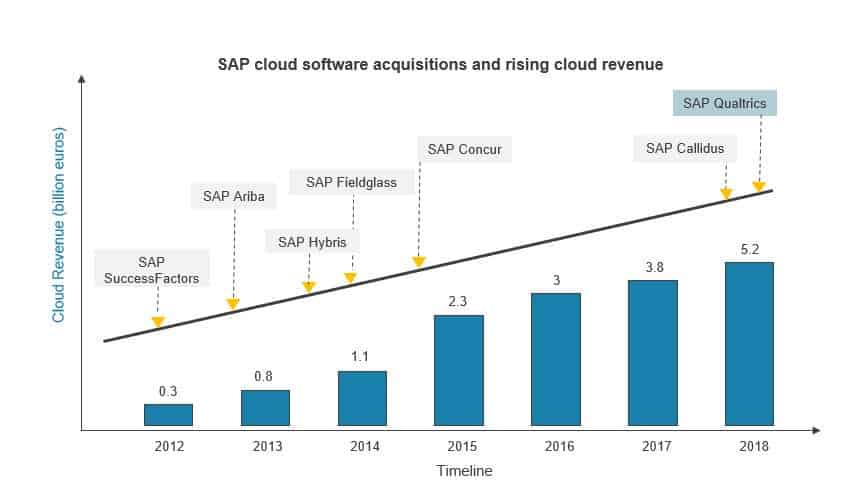

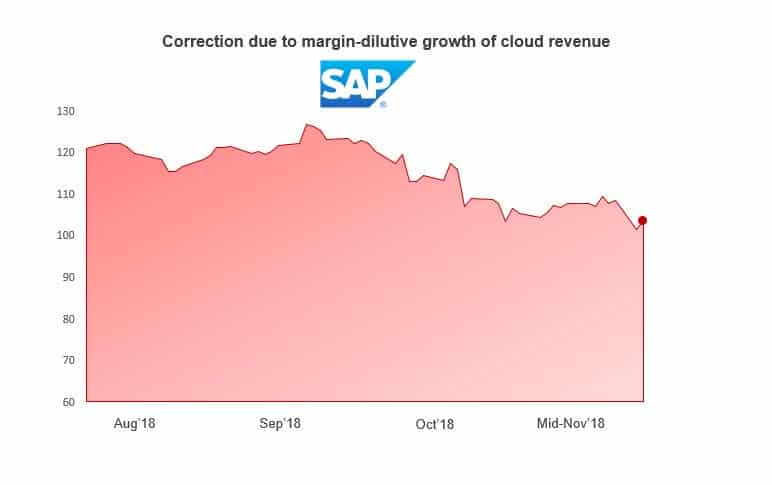

Yet a few forward-thinking enterprises are now challenging this status quo for the Cloud 3.0 wave. They plan to leverage the cloud as a transformative model, building in native services not only to modernize the existing technology landscape but also to support cloud-based analytics, IoT-centric solutions, advanced architectures, and very heavy workloads. The main difference is that these workloads won’t just “reside” on the cloud; they will use the fundamental capabilities of the cloud model for perpetual transformation.

So, what does your enterprise need to do to follow their lead?

Of course, you need to start by building the business case for transformation. Once that is done and you’ve addressed the change management aspects, follow these three key technology-centric steps:

Redo workloads on the cloud

Many monolithic applications, such as data warehouses and sales applications, have already been ported to a cloud model. You need to break yours down and prioritize the pieces based on their importance and the extent of their technical debt, that is, how much transformation they need. Depending on the value they can deliver and their architectural complexity, some components may be taken out of the existing cloud and moved in-house or to other cloud platforms. Others can leverage cloud-based functionality (e.g., for data analytics) to drive further customer value. You should also consider extending these existing workloads to take advantage of newer cloud platform features, such as IoT-based data gathering and advanced authentication.

Revisit new builds on the cloud

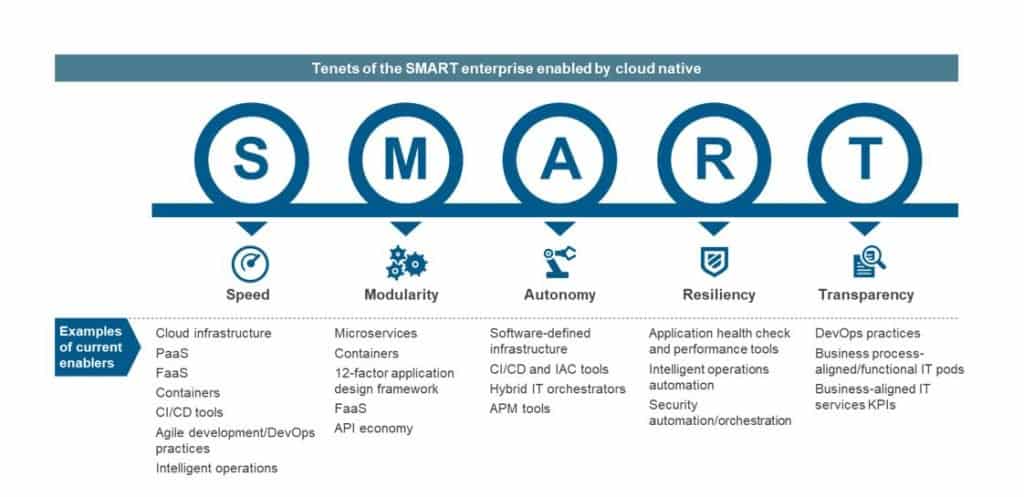

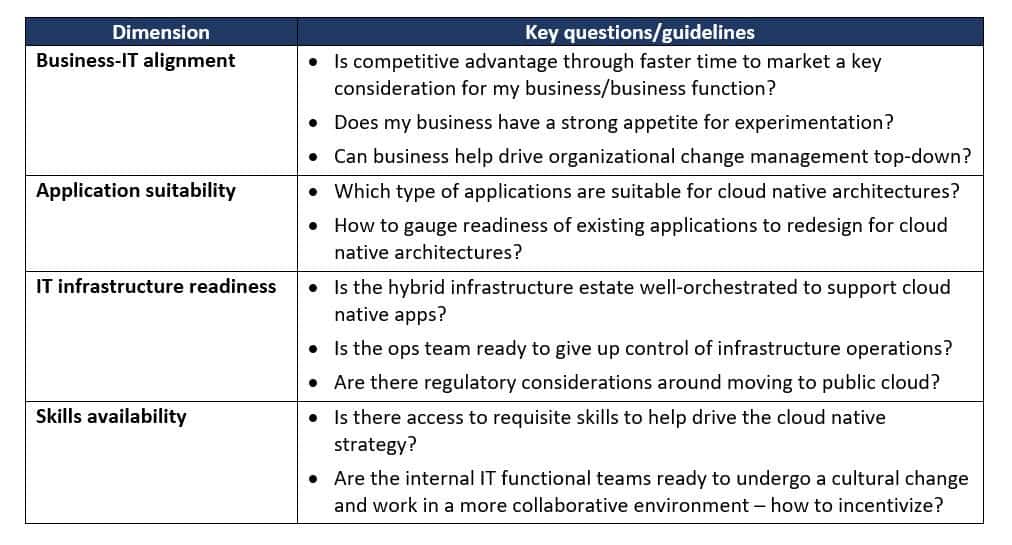

Our research suggests that only 27 percent of today’s enterprises are meaningfully building and deploying cloud-native workloads, meaning workloads with self-scaling, tuning, replication, backup, high availability, and cloud-based API integration. You must proactively assess whether your enterprise needs cloud-native architectures to build out new solutions. Of course, cloud native does not mean every module must leverage the cloud platform, but a healthy share of each workload should make use of native cloud capabilities.

Rethink development and IT operations on the cloud

Many enterprises overlook this step because they believe the cloud’s inherent efficiency is enough to transform their operating model. Unfortunately, it does not work that way. For cloud-hosted or cloud-based development, you need to re-examine your enterprise’s code pipelines, integrations, security, and other aspects of IT operations. The best practices of the on-premises era remain relevant, albeit in a different form (for example, with tweaks to the established ITSM model). Your developers need to get comfortable using abstracted APIs rather than worrying about what is under the hood.

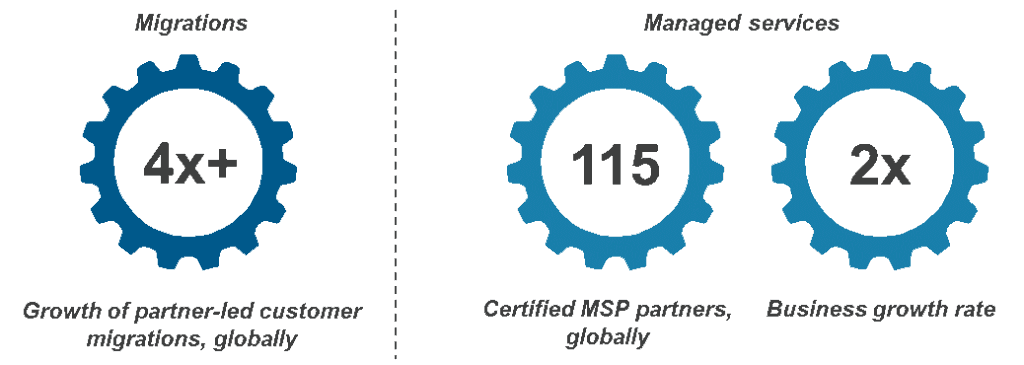

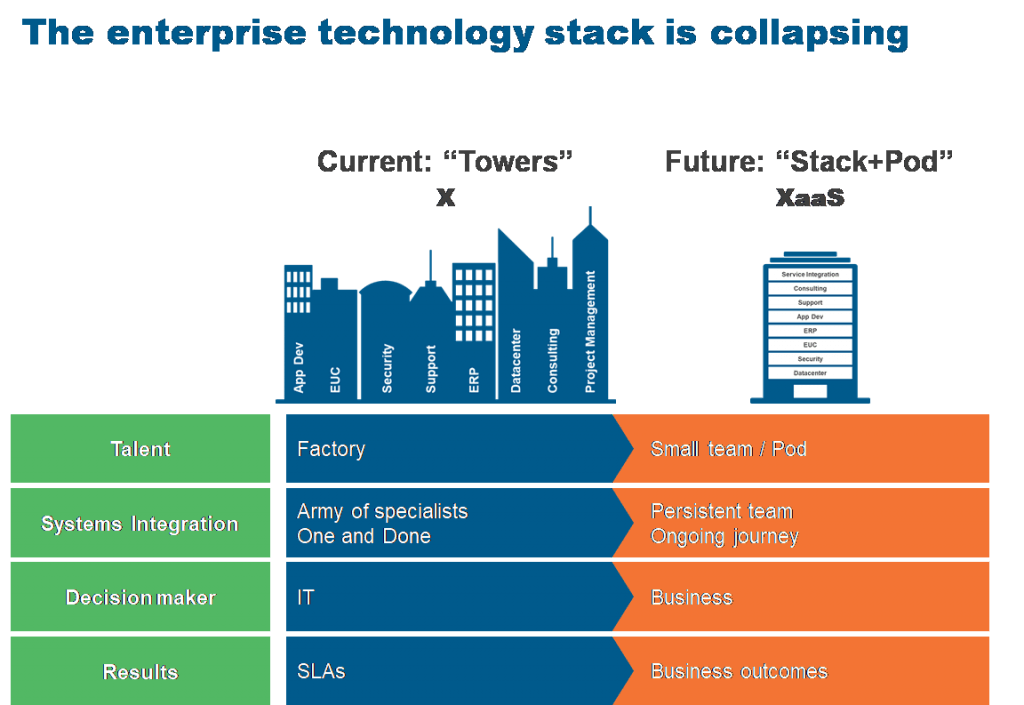

In the Cloud 3.0 wave, enterprises need to leverage the cloud as a transformation platform rather than just another hosting model. Today, many limit their cloud journey to migration and transition; this needs to change. Enterprises will also have to decide whether they can realistically build so many native services in their private clouds. The answer is probably not, which makes the strategic decision to leverage hybrid models even more important. Service partners, too, will need to extend their offerings beyond migration, transformation during migration, and management, and drive the continuous evolution of workloads once they are ported to or built on the cloud.

Remember, the cloud itself is not magic. What makes it magical is the additional transformation you can derive beyond the cloud platform’s core capabilities.

What has been your experience in adopting cloud services? Please write to me at [email protected].