Sustainable Business Needs Sustainable Technology: Can Cloud Modernization Help?

Estimates suggest that enterprise technology accounts for 3-5% of global power consumption and 1-2% of carbon emissions. Although technology systems are becoming more power efficient, optimizing power consumption is a key priority for enterprises to reduce their carbon footprints and build sustainable businesses. Cloud modernization can play an effective part in this journey if done right.

Current practices are not aligned to sustainable technology

The way systems are designed, built, and run impacts enterprises’ electricity consumption and CO2 emissions. Let’s take a look at the three big segments:

- Architecture: IT workloads, by design, are built for failover and recovery. Though businesses need these backups in case the main systems go down, the duplication results in significant electricity consumption. Most IT systems were built for the “age of deficiency,” when underlying infrastructure assets were costly, rationed, and difficult to provision. As a result, every major system has a massive backup to counter failure events, essentially multiplying electricity consumption.

- Build: For each large ERP production system, there are 6 to 10 non-production systems across development, testing, and staging, because developers, QA, security, and pre-production teams each end up building their own environments. Yet whenever a system is built, the entire infrastructure has to be configured, even though the team needs only 10-20% of it. Thus, most of the electricity consumed ends up powering capacity that isn’t needed at all.

- Run: Operations teams have to make do with what the upstream teams have given them. They can’t take down systems to save power on their own because the systems weren’t designed to work that way. So, the run teams ensure every IT system is up and running. Their KPIs are tied to availability and uptime, which incentivizes them to keep systems “over available” even when they aren’t being used. The run teams didn’t, and still don’t, have real-time insights into the operational KPIs of their systems landscape that would let them dynamically decide which systems to shut off to save power.
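To make the Build numbers concrete, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that every non-production system is provisioned at the same size as production, that production itself is fully used, and that idle capacity is a reasonable proxy for wasted power. The function name and the model are hypothetical, not taken from any study.

```python
def idle_capacity_fraction(non_prod_systems: int, utilization: float) -> float:
    """Share of all provisioned capacity that sits idle in non-production.

    Assumptions (illustrative only): each non-production system is the same
    size as production, and production is fully utilized.
    """
    if non_prod_systems < 0 or not 0.0 <= utilization <= 1.0:
        raise ValueError("need non_prod_systems >= 0 and 0 <= utilization <= 1")
    total_capacity = 1 + non_prod_systems           # production + copies
    idle_capacity = non_prod_systems * (1 - utilization)
    return idle_capacity / total_capacity


# 6 copies at 20% use, and 10 copies at 10% use (the ranges quoted above)
print(f"{idle_capacity_fraction(6, 0.20):.0%}")   # 69%
print(f"{idle_capacity_fraction(10, 0.10):.0%}")  # 82%
```

Under these simplified assumptions, roughly two-thirds to four-fifths of the provisioned capacity would be doing nothing. Real power draw is not strictly proportional to capacity, so treat this as an order-of-magnitude illustration only.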

The role of cloud modernization in building a sustainable technology ecosystem

In February 2020, an article published in the journal Science suggested that, despite digital services from large data center and cloud vendors growing sixfold between 2010 and 2018, energy consumption grew by only 6%. I discussed power consumption as an important element of “Ethical Cloud” in a blog I wrote earlier this year.

Many cynics say that cloud just shifts power consumption from the enterprise to the cloud vendor. There’s a grain of truth to that. But I’m addressing a different aspect of cloud: using cloud services to modernize the technology environment and envision newer practices to create a sustainable technology landscape, regardless of whether the cloud services are vendor-delivered or client-owned.

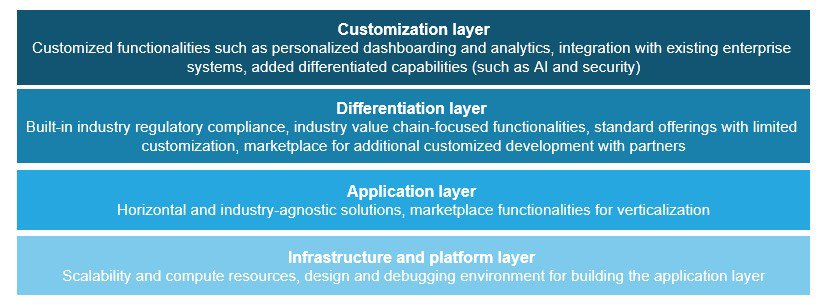

Cloud 1.0 and 2.0: By now, many architects have used cloud’s runtime access to underlying infrastructure, which can address the issues around over-provisioning. Virtual servers on the cloud can be switched on or off as needed, and doing so reduces carbon emissions. Moreover, because cloud instances can be provisioned quickly, they are fault tolerant by design and don’t rely on excessive backup systems. They can be designed to go down, with a backup that turns on immediately rather than staying online forever. The development, test, and operations teams can provision infrastructure as and when needed, and shut it down when their work is completed.
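As a minimal sketch of the "switch on or off as needed" idea, the snippet below decides whether a development or test environment should be running at a given moment. The working hours, the workdays, and the function names are assumptions chosen for illustration; a real setup would call the cloud provider's start and stop operations, which are deliberately left out here.

```python
from datetime import datetime

# Hypothetical policy: non-production runs 08:00-18:00, Monday to Friday.
WORK_START_HOUR = 8
WORK_END_HOUR = 18
WORKDAYS = {0, 1, 2, 3, 4}  # datetime.weekday(): Monday is 0


def should_be_running(now: datetime) -> bool:
    """Return True if a non-production environment should be powered on."""
    return now.weekday() in WORKDAYS and WORK_START_HOUR <= now.hour < WORK_END_HOUR


def weekly_hours_saved() -> int:
    """Hours per week the environment is off compared with always-on."""
    on_hours = len(WORKDAYS) * (WORK_END_HOUR - WORK_START_HOUR)
    return 7 * 24 - on_hours


print(should_be_running(datetime(2024, 1, 3, 10, 0)))  # Wednesday morning: True
print(weekly_hours_saved())                            # 118
```

With this example policy, the environment is off for 118 of the 168 hours in a week. The actual saving depends on how each team works and on what the platform still consumes while instances are stopped.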

Cloud 3.0: In the next wave of cloud services, with enabling technologies such as containers, functions, and event-driven applications, enterprises can amplify their sustainable technology initiatives. Enterprise architects will design workloads that treat failure as an essential element to be tackled through orchestration of runtime cloud resources, instead of relying on traditional failover methods that promote overconsumption. They can modernize existing workloads that need “always on” infrastructure and underlying services to an event-driven model, in which the application code and infrastructure lie idle and come online only when needed. A while back I wrote a blog that talks about how AI can be used to compose an application at runtime instead of always being available.

Server virtualization played an important role in reducing power consumption. However, now, by using containers, which are significantly more efficient than virtual machines, enterprises can further reduce their power consumption and carbon emissions. Though cloud sprawl is stretching the operations teams, newer automated monitoring tools are becoming effective in providing a real-time view of the technology landscape. This view helps them optimize asset uptime. They can also build infrastructure code within development to make an application aware of when it can let go of IT assets and kill zombie instances, which enables the operations team to focus on automating and optimizing, instead of managing systems that are always on.
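One way to picture the "zombie instance" check is a simple filter over monitoring data. The sketch below is hypothetical: the `Instance` fields, the thresholds, and the sample data are invented for illustration, and a real implementation would read these values from whatever monitoring tool is in use.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Instance:
    name: str
    avg_cpu_percent: float      # average CPU over the observation window
    last_request_at: datetime   # last time the instance served any request


def find_zombies(
    instances: list[Instance],
    now: datetime,
    max_idle: timedelta = timedelta(days=7),   # assumed idle threshold
    cpu_threshold: float = 2.0,                # assumed "near zero" CPU level
) -> list[str]:
    """Return names of instances that look abandoned: low CPU and no recent traffic."""
    return [
        inst.name
        for inst in instances
        if inst.avg_cpu_percent < cpu_threshold
        and now - inst.last_request_at > max_idle
    ]


now = datetime(2024, 1, 31, 12, 0)
fleet = [
    Instance("erp-prod", 45.0, now - timedelta(minutes=1)),
    Instance("erp-test-old", 0.5, now - timedelta(days=30)),
    Instance("erp-staging", 1.0, now - timedelta(days=2)),
]
print(find_zombies(fleet, now))  # ['erp-test-old']
```

Requiring both conditions is a deliberate choice: a quiet but recently used staging system is kept, while an instance that has had no traffic for weeks is flagged for review before anyone shuts it down.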

Moreover, because the entire cloud migration process is getting optimized and automated, power consumption is further reduced. Newer cloud-native workloads are being built in the above model. However, enterprises have large legacy technology landscapes that need to move to an on-demand cloud-led model if they are serious about their sustainability initiatives. Though the business case for legacy modernization does consider power consumption, it mostly focuses on movement of workload from on-premises to the cloud. And it doesn’t usually consider architectural changes that can reduce power consumption, even if it’s a client-owned cloud platform.

When considering next-generation cloud services, enterprises should rethink their modernization journeys beyond a data center exit to building a sustainable technology landscape. They should consider leveraging cloud-led enabling technologies to fundamentally change the way their workloads are architected, built, and run. However, enterprises can only think of building a sustainable business through sustainable technology when they’ve adopted cloud modernization as a potent force to reduce power consumption and carbon emissions.

This is a complex topic to solve for, but we all have to start somewhere. And there are certainly other options to consider, such as greater reliance on renewable energy and reduction in travel. I’d love to hear what you’re doing, whether it’s using cloud modernization to reduce carbon emissions, just shifting your emissions to a cloud vendor, or another approach. Please write to me at [email protected].