Modern enterprises rely on data as the backbone of digital, analytics, and generative AI (gen AI) capabilities. But rising consumption, complex multi-cloud pipelines, and proliferating stakeholders are directly affecting reliability and costs.

Data observability acts as the operating layer that gives teams continuous visibility into data health, pipeline performance, compliance, and spending. It also closes the loop with anomaly detection, triage, and auto-recommendations.

Everest Group’s latest PEAK Matrix® Assessment on Data Observability Technology Providers highlights this shift. Adoption is accelerating, buyer priorities are sharpening, and the value chain is broadening to include cost management and Artificial Intelligence (AI) / Machine Learning (ML) augmentation.

Reach out to discuss this topic in depth.

Understanding data observability

At its core, data observability brings transparency and trust to enterprise data ecosystems. It ensures that data is healthy, reliable, compliant, and cost-efficient. Unlike standalone quality initiatives that focus on dataset accuracy and consistency, data observability monitors health and flow across pipelines. It proactively detects and resolves issues such as errors or delays before they affect downstream processes.

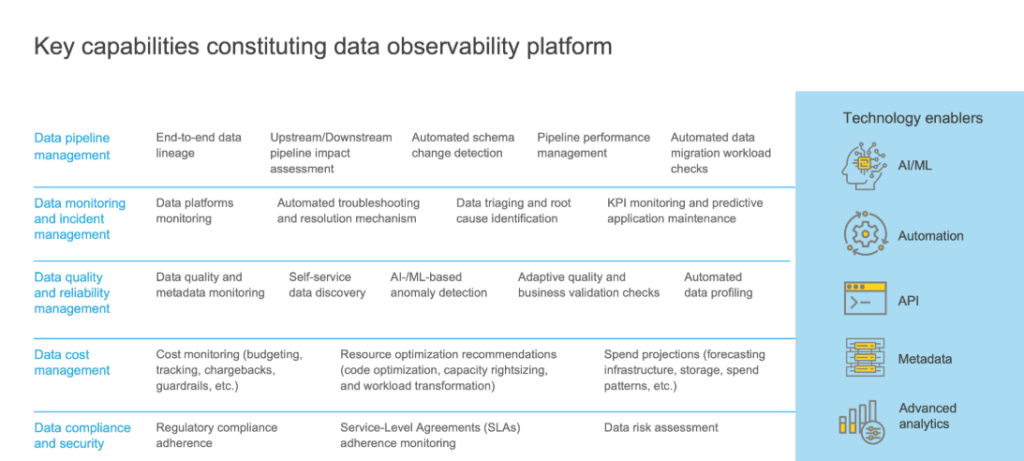

A useful way to understand data observability is through its value chain, which comprises five core modules supported by enabling technologies such as AI/ML, automation, Application Programming Interfaces (APIs), metadata, and advanced analytics, as highlighted in the exhibit below:

Exhibit 1: Key capabilities constituting a data observability platform

- Data pipeline management: Provides visibility into lineage and dependencies, reducing risk during migrations and architecture changes

- Data monitoring and incident management: Shifts operations from reactive firefighting to proactive prevention, minimizing downtime

- Data quality and reliability management: Keeps data accurate, consistent, and usable by detecting anomalies and validating against business rules, ensuring dashboards, reports, and data models operate with trustworthy inputs

- Data cost management: Embeds cost guardrails and optimization insights, connecting data reliability with financial accountability

- Data compliance and security: Integrates compliance checks into operations, lowering audit costs and reducing regulatory risk

Together, these modules elevate data observability from a monitoring tool to a trust layer that supports digital transformation, data-driven decision-making, and AI adoption.
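To make the quality and reliability module more concrete, here is a minimal sketch of the two checks it describes: detecting an anomaly and validating records against business rules. The function names, the z-score threshold, the sample row counts, and the rules are all illustrative assumptions, not taken from any specific platform.

```python
from statistics import mean, stdev


def is_volume_anomaly(history, today, z_threshold=3.0):
    """Flag today's row count if it sits more than z_threshold standard
    deviations away from the historical mean (a simple z-score test)."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold


def rule_violations(rows, rules):
    """Return (row_index, rule_name) for every row that fails a rule."""
    return [
        (i, name)
        for i, row in enumerate(rows)
        for name, check in rules.items()
        if not check(row)
    ]


# Hypothetical daily row counts for one table.
daily_row_counts = [10_120, 9_980, 10_050, 10_210, 9_940]

# Hypothetical business rules for an orders dataset.
rules = {
    "amount_non_negative": lambda r: r["amount"] >= 0,
    "currency_present": lambda r: bool(r.get("currency")),
}

orders = [
    {"amount": 25.0, "currency": "USD"},
    {"amount": -4.0, "currency": "USD"},
    {"amount": 12.5, "currency": ""},
]

volume_alert = is_volume_anomaly(daily_row_counts, today=4_000)
violations = rule_violations(orders, rules)
```

In practice a platform would likely learn thresholds per dataset and account for seasonality. The fixed z-score here only illustrates the idea of comparing today's signal against a historical baseline.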

Enterprise challenges and best practices

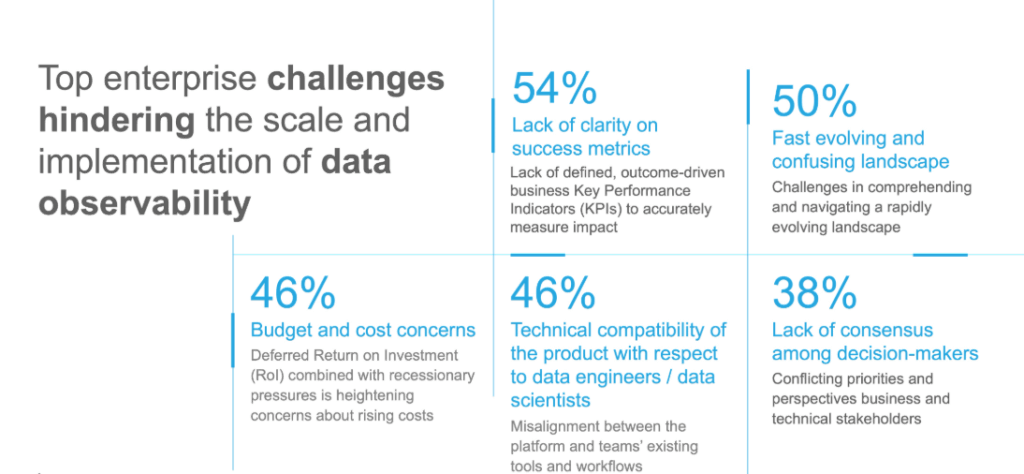

Scaling data observability is not without obstacles. Unclear success metrics, cost pressures, and security concerns often stall progress. Exhibit 2 below highlights the top challenges enterprises face when implementing data observability at scale.

Exhibit 2: Top enterprise challenges hindering the scale and implementation of data observability

However, each challenge has a practical solution when observability is treated as a capability, rather than just a tool or project:

- Unclear success metrics: Enterprises often track technical signals instead of business outcomes. Key Performance Indicators (KPIs) should be reframed in business terms, such as freshness tied to Service Level Agreements (SLAs) or anomaly detection linked to revenue leakage. This makes data observability measurable in the language of the business

- Fast-evolving provider landscape: The market is crowded. Using the value chain as a reference helps identify overlaps, gaps, and integration points rather than comparing features one by one

- Budget and cost concerns: Deploying observability everywhere at once drives costs up. Instead, enterprises should prioritize high-value, high-risk data assets and link observability to FinOps, turning cost visibility into cost control

- Unclear scope: Treating observability as a project creates confusion. Defining it in terms of data products or business domains helps clarify scope

- Security and privacy concerns: Enterprises need platforms that adapt to risk profiles. Platforms must support observability that travels with the data, ensuring privacy across environments

Enterprises that address these challenges with intent can build observability into a measurable and scalable capability.
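As an illustration of reframing a technical signal in business terms, the sketch below ties dataset freshness to an SLA and rolls individual checks up into an attainment KPI. The function names, the two-hour SLA, and the timestamps are hypothetical and chosen only for the example.

```python
from datetime import datetime, timedelta, timezone


def freshness_breach(last_loaded_at, sla, now):
    """Return how far past its freshness SLA a dataset is,
    or None if the dataset is still within the SLA."""
    lag = now - last_loaded_at
    return lag - sla if lag > sla else None


def sla_attainment(check_results):
    """Share of freshness checks that met the SLA (None means 'met')."""
    if not check_results:
        return None
    met = sum(1 for result in check_results if result is None)
    return met / len(check_results)


# Hypothetical example: a dataset that must be refreshed every two hours.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
sla = timedelta(hours=2)

results = [
    freshness_breach(now - timedelta(hours=1), sla, now),     # within SLA
    freshness_breach(now - timedelta(hours=3), sla, now),     # one hour late
    freshness_breach(now - timedelta(minutes=30), sla, now),  # within SLA
    freshness_breach(now - timedelta(hours=2), sla, now),     # exactly on SLA
]

attainment = sla_attainment(results)
```

Reporting the attainment ratio rather than raw lag values is one way to express the metric in the terms a business stakeholder would recognize from an SLA.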

Market demand themes and what’s changing

Data observability is evolving into a strategic necessity, driven by three major shifts:

- Rising consumption and complexity: Nearly two-thirds of enterprises report growth in dashboards and assets and highlight rising complexity in data journeys as a key driver for data observability adoption. Rising volumes of semi-structured and unstructured data, expanding data sources, and the push to scale AI/ML all strengthen the case for data observability

- Maturing buyer priorities: Buyers now demand deep integration, transparent pricing, flexible hosting, and strong security. The market has shifted from experimentation to enterprise-grade readiness

- Increasing governance, compliance, and regulatory pressures: Enterprises face rising requirements around data governance, compliance, and cross-border regulations. Data observability helps enterprises manage compliance proactively, reduce audit overheads, and build greater confidence with regulators and customers

For leaders, the implication is simple: plan for expanded coverage, insist on interoperability, and prepare for convergence with governance and catalogs over time.

The way forward

The next phase of data observability will be shaped by AI adoption, cloud economics, and federated ownership. Several shifts are already underway:

- AI pipelines come into scope: Observability is extending into ML and Large Language Model (LLM) workflows, monitoring data from ingestion to model outputs. Early anomaly detection prevents bad data from propagating through models

- Agents enter the loop: Autonomous assistants will handle routine fixes, such as schema changes or data backfills, escalating only exceptions and freeing teams for higher-value work

- Self-service becomes standard: Monitors, lineage views, and SLA templates will be embedded in data products, empowering domain teams and reducing reliance on central data engineering

- FinOps demand rises: Automated guardrails will enforce budgets, flag costly queries, and optimize workloads in real time, balancing data reliability with financial accountability

- Shift from detection to auto-remediation: Platforms will progress from anomaly detection and recommendations to auto-remediation, where systems rerun pipelines, adjust schemas, or backfill data automatically, with human oversight ensuring alignment to business priorities

- Coverage expands: Observability will extend beyond tables to logs, documents, Internet of Things (IoT) streams, and unstructured formats, eliminating blind spots and ensuring reliable outcomes for analytics and AI

- Convergence accelerates: Observability, governance, and catalogs will increasingly integrate, forming a unified trust and observability stack

Together, these shifts point to a future where data observability evolves into an intelligent, proactive, cost-aware, and AI-ready enterprise capability.
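The FinOps guardrails described above can be pictured with a small sketch that flags costly queries and teams that exceed their budgets. The `QueryRun` structure, the team names, the budgets, and the per-query limit are all assumptions made for illustration. A real platform would draw these figures from warehouse billing or query-history metadata.

```python
from dataclasses import dataclass


@dataclass
class QueryRun:
    query_id: str
    team: str
    cost_usd: float


def evaluate_guardrails(runs, team_budgets, per_query_limit):
    """Return (costly_query_ids, over_budget_teams) for a batch of runs."""
    # Flag individual queries whose cost exceeds the per-query limit.
    costly = [r.query_id for r in runs if r.cost_usd > per_query_limit]

    # Aggregate spend per team and compare it against each team's budget.
    spend = {}
    for r in runs:
        spend[r.team] = spend.get(r.team, 0.0) + r.cost_usd
    over_budget = sorted(
        team
        for team, total in spend.items()
        if total > team_budgets.get(team, float("inf"))
    )
    return costly, over_budget


# Hypothetical query runs and budgets.
runs = [
    QueryRun("q1", "marketing", 40.0),
    QueryRun("q2", "marketing", 75.0),
    QueryRun("q3", "finance", 10.0),
]
team_budgets = {"marketing": 100.0, "finance": 50.0}

costly, over_budget = evaluate_guardrails(runs, team_budgets, per_query_limit=50.0)
```

This sketch only detects and reports. Enforcement, such as pausing a workload or routing it for approval, would be a separate policy decision with human oversight, in line with the shift toward auto-remediation outlined above.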

Implications for enterprise leaders

For enterprises, data observability is no longer optional; it is foundational. It can no longer be relegated to engineering teams and must be part of data strategy, governance, and risk management. To capture its full value:

- Use the value chain as your blueprint: Align modules with top risks and priorities, ensuring deployment where it delivers the greatest impact, for example safeguarding mission-critical pipelines, reducing downtime, or protecting compliance. This structured approach prevents tool-centric solutions and builds observability into a disciplined capability

- Balance find-and-fix with assure-and-optimize: Go beyond detection to embed compliance and FinOps guardrails. This dual focus strengthens uptime, cost control, and regulatory adherence while enhancing trust

- Source for the road ahead: Choose platforms that integrate broadly across the data stack and provide credible roadmaps for AI observability, auto-remediation, and convergence. Future-proof sourcing ensures investments remain relevant as AI adoption, compliance demands, and integration needs expand

By following these imperatives, enterprises can position data observability as a strategic capability that underpins reliable digital operations, trustworthy analytics, and scalable AI adoption.

If you enjoyed this blog, check out our Technology And Digital Leaders (Digital & Next-gen) – Everest Group page, which delves deeper into other topics regarding technology and digital leaders.

To learn more about AI and trust in data, as well as anything else relating to artificial intelligence, please contact Chetan Dabade and Samikshya Meher.