Banking and financial services (BFS) enterprises are accelerating the adoption of agentic artificial intelligence (AI): autonomous systems that execute multi-step processes, analyze data, and make decisions at scale. From underwriting to fraud detection, these agents are redefining how tasks are performed and raising questions about the future of BFS operations.

The reality, however, is more nuanced. BFS is not only about accuracy and compliance; it is also about delivering emotionally intelligent, trust-centered customer experiences. This need has intensified in the wake of recent market shifts: from the surge in digital transactions and the associated rise in fraud incidents, to new regulatory pressure such as the Financial Conduct Authority’s (FCA’s) Consumer Duty in the UK and the United States Securities and Exchange Commission’s (SEC’s) growing scrutiny of AI decisioning transparency.

Simultaneously, the entry of crypto and stablecoin ecosystems into mainstream financial frameworks (e.g., the proposed U.S. “GENIUS Act”) is blurring traditional trust boundaries, pushing banks to reimagine how they build human connection in digital interactions.

Against this backdrop, the concept of humanizing AI agents emerges as the next frontier. By combining agentic autonomy in decision-making with emotional intelligence, BFS firms can create systems that act with speed and precision while also recognizing and responding to human needs and emotions.


Why humanizing AI matters in BFS

Agentic AI systems excel at structured, rules-based decisioning: scanning vast datasets, flagging anomalies, and executing transactions with precision. But in BFS, customer interactions frequently extend beyond the purely logical. They involve anxiety, trust, aspirations, and the need for reassurance in moments of financial stress or opportunity.

Consider a wealth management client who receives an automated portfolio alert during a sudden market downturn. Traditional AI-driven robo-advisors may mechanically suggest rebalancing to reduce exposure, but such advice, delivered without context or empathy, often fuels anxiety, especially when the client’s financial goals are long term.

Now imagine a humanized AI advisory system that interprets both semantic and emotional cues. It detects concern in the client’s recent chat queries such as “Should I liquidate now?” and pairs that with market volatility data. The agent responds with emotional intelligence: “I understand the markets feel unpredictable right now. Based on your long-term goals, a measured adjustment rather than a full exit may protect your portfolio while maintaining growth potential. Would you like me to schedule a call with your advisor to review this together?”

Here, AI augments human expertise by translating market logic into emotionally resonant reassurance. The outcome is not just accurate advisory but sustained trust, which is a core differentiator in wealth and commercial banking relationships.

This tension between rules and relationships is why personalization has always been a linchpin of BFS success. For decades, banks that remembered customer preferences, anticipated financial needs, and adapted their offerings earned deeper loyalty and higher engagement. In today’s AI-driven, digital-first landscape, that expectation has only intensified: customers now look for interactions that feel individually tailored and emotionally attuned across mobile apps, chatbots, and digital service platforms.

Some banks are already demonstrating the payoff. NatWest, for example, has collaborated with OpenAI to enhance its chatbot “Cora” and virtual assistant “AskArchie”, resulting in a 150% improvement in customer satisfaction and reduced dependency on human advisors. The example shows how AI, when emotionally attuned, can feel more helpful and reassuring during stressful events like fraud alerts.

The same principle applies across lending, wealth management, and capital markets: customers don’t just seek correct answers, they seek empathetic engagement. Humanized AI systems bridge this gap by weaving emotional awareness into workflows, allowing BFS firms to deliver both intelligence and empathy at scale.

What humanizing AI agents bring to BFS

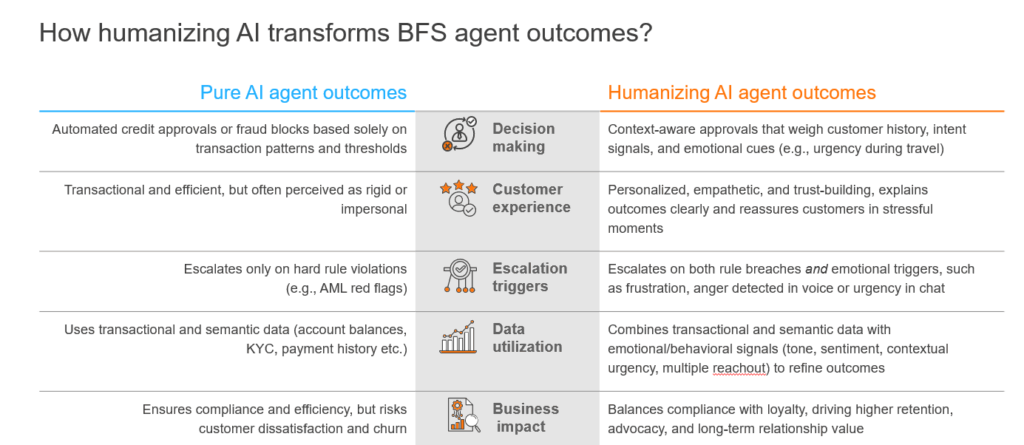

Humanizing AI agents enhances standard agentic AI outcomes by incorporating cues from voice, text, and sentiment. This makes them better suited for trust-based and customer-facing industries like BFS.

Exhibit 1:

This shift is not incremental; it is transformative. It enables BFS enterprises to move beyond delivering a service that is merely fast and compliant, toward experiences that are responsive, human, and anchored in trust. Yet, while several leading BFS firms are piloting emotionally aware AI systems, only a few have scaled them across end-to-end workflows or integrated them into production-grade environments.

At Everest Group, we observe that most institutions currently sit in the early-to-mid maturity stages of emotional AI adoption, focused on proof-of-concept deployments within customer service or fraud operations. Only the most advanced are evolving toward Systems of Execution (SoE) maturity, where emotional intelligence is embedded into decisioning, advisory, and customer engagement layers. This maturity gap underscores both the transformational potential and the operational challenge of humanizing AI in BFS today.

Leveraging semantic and emotional data together

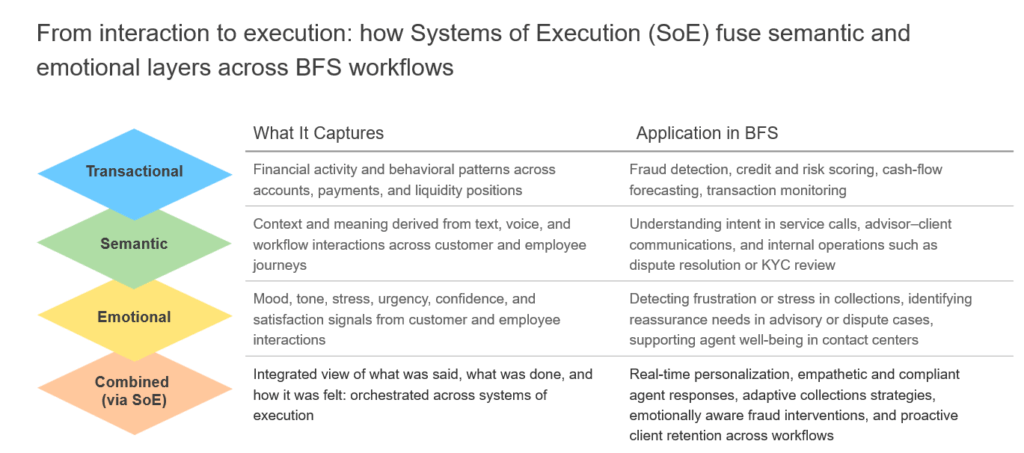

BFS institutions already sit on mountains of semantic data: transaction histories, chat transcripts, call logs, and case notes. These sources capture what customers do and say, but often miss how they feel. Emotional AI adds that missing layer by detecting signals of mood, tone, stress, and satisfaction in real time.

When semantic and emotional data are combined, banks can move from understanding what happened to understanding why it happened and how to respond. This capability has started to reshape contact center operations, where emotionally aware AI agents detect stress or confusion in a customer’s tone and adapt responses in real time. However, institutional buyers are now looking to extend this intelligence across the back-office value chain: from collections and fraud operations to advisory and credit decisioning.

The true unlock lies in SoEs, which orchestrate semantic, transactional, and emotional data across multiple workflows. By embedding emotional intelligence into these execution layers, BFS enterprises can create humanized systems that act with both precision and empathy, delivering not just a faster service, but also relationship-led outcomes at scale.

Exhibit 2:

For example, during collections, semantic data may show that a customer has missed two payments. On its own, this could trigger a standard overdue notice. But emotional data might reveal stress in their voice during a follow-up call. Together, these signals could prompt the system to soften the language, offer flexible repayment plans, or escalate the case to a human specialist, thus balancing recovery with empathy and preserving long-term trust.
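The collections scenario above can be sketched as a simple routing rule. This is only an illustration under stated assumptions, not a production design: the `stress_score` field is assumed to come from an upstream voice or text emotion model, and the thresholds, class names, and action names are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NO_ACTION = "no_action"
    STANDARD_NOTICE = "standard_overdue_notice"
    SOFTENED_NOTICE_WITH_PLAN = "softened_notice_with_flexible_plan"
    ESCALATE_TO_SPECIALIST = "escalate_to_human_specialist"


@dataclass(frozen=True)
class CustomerSignals:
    missed_payments: int  # semantic signal, e.g. from payment history
    stress_score: float   # emotional signal in [0, 1]; assumed output of an upstream model


# Hypothetical thresholds, chosen purely for illustration.
MISSED_PAYMENTS_TRIGGER = 2
STRESS_SOFTEN = 0.5
STRESS_ESCALATE = 0.8


def choose_collections_action(signals: CustomerSignals) -> Action:
    """Combine a semantic and an emotional signal into one next-best action."""
    if not 0.0 <= signals.stress_score <= 1.0:
        raise ValueError("stress_score must be in [0, 1]")
    # Semantic data alone decides whether any collections step is due.
    if signals.missed_payments < MISSED_PAYMENTS_TRIGGER:
        return Action.NO_ACTION
    # Emotional data then shapes how the step is delivered.
    if signals.stress_score >= STRESS_ESCALATE:
        return Action.ESCALATE_TO_SPECIALIST
    if signals.stress_score >= STRESS_SOFTEN:
        return Action.SOFTENED_NOTICE_WITH_PLAN
    return Action.STANDARD_NOTICE


if __name__ == "__main__":
    customer = CustomerSignals(missed_payments=2, stress_score=0.65)
    print(choose_collections_action(customer).value)
    # prints "softened_notice_with_flexible_plan"
```

Keeping the semantic trigger and the emotional modifier as separate checks means the emotional signal only changes how the bank responds, never whether a payment is treated as overdue.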

The technology partner ecosystem for humanizing AI

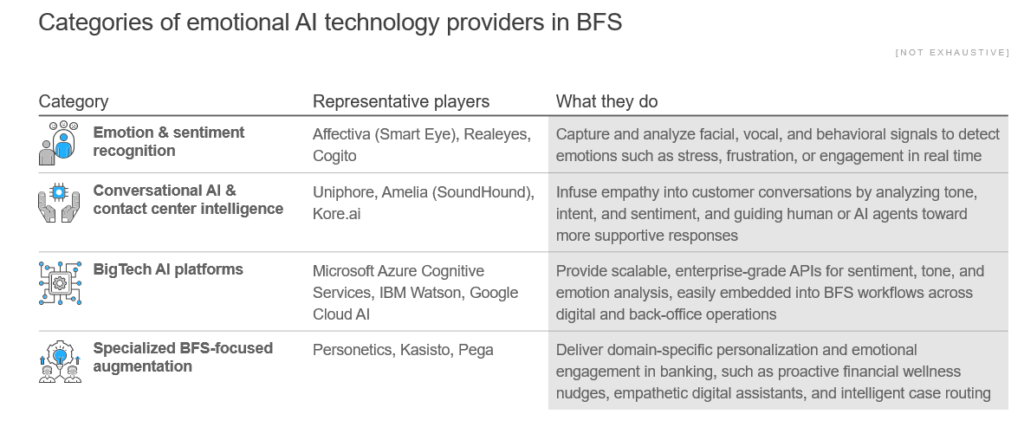

Delivering on this vision of emotionally intelligent workflows requires the right technology stack. BFS enterprises do not need to start from scratch: there is already a diverse ecosystem of technology providers that specialize in different aspects of emotional AI, from real-time sentiment analysis to conversational intelligence. Understanding this landscape is the first step toward selecting the right partners.

Exhibit 3:

Together, these players form a rich partner ecosystem for BFS firms and IT-BP providers to operationalize emotional AI, spanning capabilities from emotion detection (voice, facial, text) and sentiment analysis to conversational intelligence and financial-services-specific augmentation.

While technology players form the backbone of the emotional AI ecosystem, IT Business Partner (IT-BP) service providers play a pivotal role in bringing these capabilities to life within BFS operations. They act as the execution orchestrators, integrating emotional and semantic intelligence from multiple technology cohorts into production workflows such as contact centers, collections, lending, and advisory.

At Everest Group, we observe that Tier 1 financial services firms are increasingly co-developing use cases with hyperscalers and emotional AI specialists, while leveraging IT-BP partners for large-scale implementation, governance, and compliance alignment. In contrast, Tier 2 and mid-market firms often rely more heavily on service providers’ pre-integrated platforms and managed solutions to accelerate deployment and lower the cost of adoption.

This creates a clear two-speed model of ecosystem evolution. Technology providers drive innovation and model capability, while IT-BP partners enable SoE maturity: embedding emotional intelligence into everyday workflows and ensuring it scales reliably across the enterprise.

Opportunities and challenges for BFS enterprises

As we have discussed so far, the BFS industry stands at a turning point. Humanizing AI offers enterprises a chance to reimagine customer engagement and move beyond service models driven solely by speed, compliance, or cost efficiency.

Opportunities

- Hyper-personalized trust signals: Embed real-time emotional intelligence into onboarding, fraud alerts, and advisory, thus turning stressful interactions into moments of reassurance that deepen customer relationships

- Regulatory-grade explainability: Use emotional and semantic data to make AI decisions transparent and defensible. This is critical as regulators sharpen their focus on the fairness of AI use in lending, fraud detection, and collections

- Next-generation Customer Experience (CX) metrics: Move beyond operational Key Performance Indicators (KPIs) to track trust indices, stress reduction, and emotional satisfaction as measurable drivers of retention and share of wallet

Challenges

- Data fusion at scale: Combining transactional, semantic, and emotional data streams into usable, real-time insights is technically complex and requires governance frameworks not yet mature

- Bias and ethical risk: Emotional data (tone, sentiment, or mood) can vary by culture and context; misinterpretation risks customer alienation and reputational damage

- Talent and cultural shift: Training frontline staff, advisors, and leadership to interpret and act on emotional insights responsibly, rather than treating them as “soft” signals, demands mindset change

Given these opportunities and challenges, BFS leaders will need to carefully prioritize use cases, since not all deliver the same Return on Investment (ROI) or strategic impact.

Exhibit 4: Illustrative BFS use cases with high potential upside for humanizing AI

| Value chain function | Today’s challenge | Humanized AI opportunity |
| --- | --- | --- |
| Customer onboarding | Digital KYC and ID checks feel rigid; exception handling often frustrates new customers | Blend real-time verification with empathetic exception handling, making onboarding both secure and welcoming |
| Fraud management | False positives trigger blocked transactions, eroding trust despite correct detection | Contextual escalation with empathetic messaging, reassurance during travel or high-stress moments |
| Lending & mortgage | Applicants face anxiety over fairness, affordability, and opaque approval criteria | Transparent, tone-aware responses that explain decisions and provide reassurance on next steps |
| Asset & wealth management | Volatility drives panic and churn; generic alerts fail to calm fears | Sentiment-driven nudges and empathetic advisory interventions that stabilize trust during downturns |
| Collections & recoveries | Debt conversations often generate shame or hostility, leading to disengagement | Stress detection and supportive repayment options that balance recovery with preserving long-term relationships |

Emotional AI agents are most impactful at moments where customer trust is fragile and traditional automation risks alienating customers. These are the emotional “pressure points” of BFS customer journeys, where empathy and reassurance can define the relationship.

Final thoughts and the way forward for the humanized AI imperative in BFS

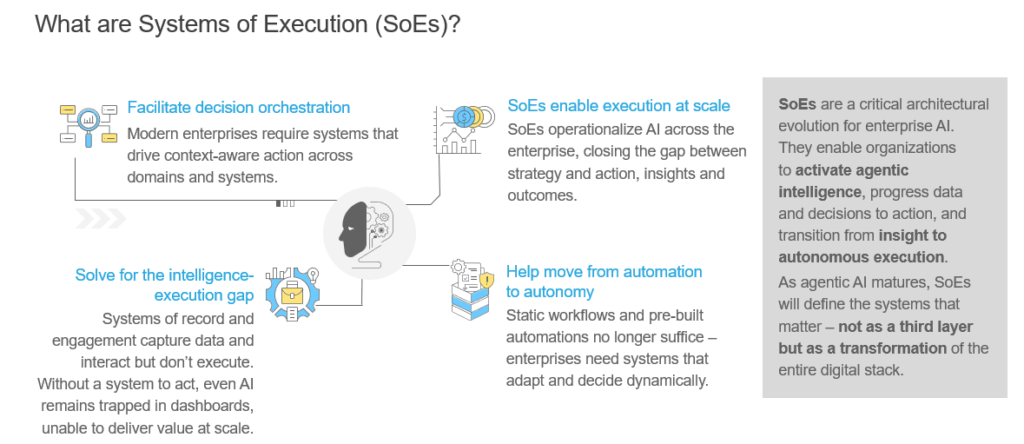

The BFS industry has entered the era of agentic AI. Yet efficiency alone is not enough. Humanized AI agents extend the model, ensuring BFS firms can deliver experiences that are both intelligent and empathetic.

To realize this vision, enterprises will need to combine semantic and emotional data, partner with specialized technology players, and build hybrid models where automation and human empathy reinforce each other.

The operational enabler of this shift is the SoE. By orchestrating semantic, transactional, and emotional data with agentic AI and human oversight, SoEs create workflows that act with intelligence and empathy at scale.

Exhibit 5:

At Everest Group, we believe that humanizing AI agents is no longer a side experiment; it is the operating model for next-generation BFS service delivery. The near-term winners will be the firms that move from pilots to production-scale SoEs, embedding emotional intelligence into real workflows across contact centers, collections, fraud, lending, and advisory.

If you found this blog insightful, you might also enjoy our recent piece, Banking on Autonomous Agents: Embracing Agentic AI in Financial Services, which explores how agentic AI is reshaping the financial services landscape.

For a closer look at a high-impact use case related to this topic, read Google’s Agent Payments Protocol (AP2): A New Chapter in Agentic Commerce, a deep dive into how agentic payments are redefining customer interactions and operational models in BFS.

To discuss the implications of humanizing AI agents within the BFS operations landscape further, reach out to [email protected], [email protected], and [email protected].