While the numbers vary depending on the source, there are roughly three billion social media users around the world in 2019. With the associated dramatic increase in manipulative and malicious content, the market for content moderation services has exploded.

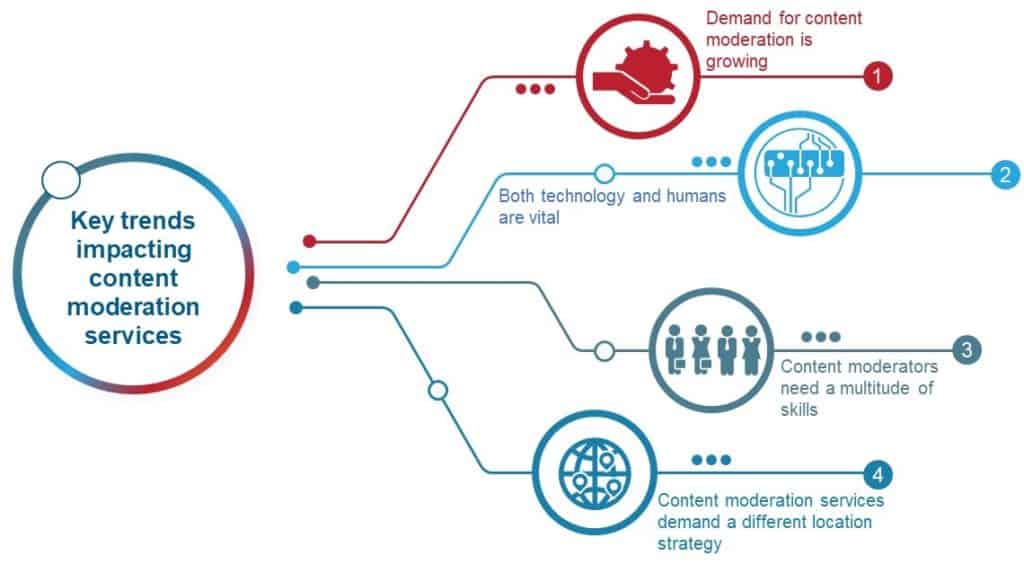

Based on our interactions with leading global enterprises and service providers, here are the four key trends impacting the content moderation services industry.

1. Demand for content moderation is growing

Given the sharp rise in inappropriate online content such as political propaganda, spam, violence, disturbing videos, dangerous hoaxes, and other extreme content, most governments have instituted or begun creating policies to regulate social networking, video, and e-commerce sites. As a result, social media companies are facing mounting legislative pressure to curate all content generated on their platforms.

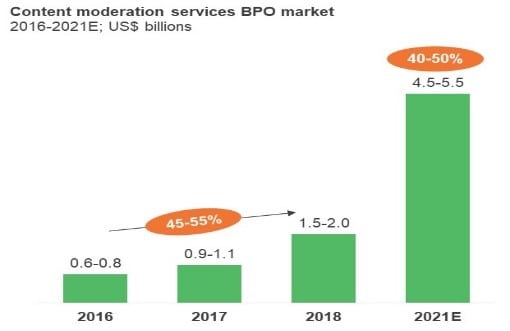

The following image shows how seriously these companies are taking the issue. Note that these numbers account only for outsourced content moderation services, not internally managed content moderation.

Orange boxes indicate CAGR / Y-o-Y growth over the years

2. Both technology and humans are vital

Technological capabilities have certainly emerged as key levers to help social media companies protect their communities and scale their content management operations. These range from robotic process automation (RPA) for repetitive manual process steps, to AI-assisted decision support tools, to AI-enabled automation of review steps. For example, established tech giants including Microsoft and Google, as well as fast-growing start-ups, have been investing in scalable AI content solutions that aim to deliver business value faster and make platforms safer.

While technology will continue to play a big role, it certainly isn’t the be-all and end-all. The judgement-intensive nature of content moderation work requires the human touch. Indeed, as the work grows more complex and regulatory oversight requirements rise, the need for human employees as part of the content moderation equation will continue to grow significantly.
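One way to picture this division of labor is a simple triage step in which an automated classifier handles only the clear-cut cases and everything else goes to a human moderator. The sketch below is purely illustrative: the `Item` type, the `route` function, the score field, and both threshold values are assumptions made for this example, not a description of any particular provider's system.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
AUTO_REMOVE_THRESHOLD = 0.95   # model is very confident the item violates policy
AUTO_APPROVE_THRESHOLD = 0.05  # model is very confident the item is acceptable


@dataclass
class Item:
    item_id: str
    violation_score: float  # assumed classifier output in the range [0, 1]


def route(item: Item) -> str:
    """Decide whether an item is handled automatically or by a human."""
    if not 0.0 <= item.violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if item.violation_score >= AUTO_REMOVE_THRESHOLD:
        return "auto_remove"
    if item.violation_score <= AUTO_APPROVE_THRESHOLD:
        return "auto_approve"
    # Ambiguous cases need judgement on intent and context.
    return "human_review"
```

In a setup like this, tightening the two thresholds sends more items to human review, which is one way the judgement-intensive share of the work can grow even as automation improves.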

3. Content moderators need a multitude of skills

Content moderation is an extremely difficult job, at times monotonous and at others disturbing. As not everyone is cut out for the role, companies need to assess candidates against multiple criteria, including:

- Language proficiency, including region-specific slang

- Local context

- Acceptance of ideas that may be contrary to self-held beliefs and personal opinions (e.g., on gender, religion, societal norms, or political issues)

- Ability to adhere to global policies

- Ability/maturity to review content that is explicit in nature

- Exposure to a multi-cultural, diverse society

- Exposure to freedom of expression, both online and offline, and a drive to protect it

- Ability to understand and accept increasingly stringent regulatory policies.

4. Content moderation services demand a different location strategy

Because every country has unique cultural, regional, and socio-political nuances, the traditional offshore/nearshore-centric location selection strategies that work for standard IT and business process services won’t work for content moderation. Companies seeking outsourced content moderation services need to look at regional hubs alongside multiple local centers to succeed. In the short term, this means working with both leading providers that have hyper-localized delivery centers and rising local providers in the target countries.

Outlook

Here’s what we see coming down the pike in the increasingly complex content moderation space.

- Short-term investments/quick fixes might take precedence over long-term investments

- Until the regulatory landscape stabilizes, companies might need to allocate a disproportionate amount of resources/spend towards compliance initiatives

- Regulatory uncertainty and ambiguity will increase demand for specialist/niche forms of talent, including legal professionals and consultants. Today’s content adjudicators will be displaced by forensic investigators with specialized skills in product, market, legal, and regulatory domains

- Companies must make talent development a priority through a specialized focus on structured talent sourcing and training, and a strong emphasis on employee well-being through various wellness initiatives

- As AI continues to grow in sophistication, a more defined synergistic relationship between humans and the technology will emerge. AI will be responsible for evaluating massive amounts of multi-dimensional content, and humans will focus on intent and deeper context analysis

- The need for a hyper-local delivery model will prompt enterprises to increasingly explore outsourcing as a potential solution to benefit from service providers’ diversified location portfolios.

To learn more about the content moderation space, please contact Hrishi Raj Agarwalla / Rohan Kapoor / Anurag Srivastava.