Neoclouds are emerging as one of the hottest stories in AI infrastructure, drawing investor enthusiasm and eye-catching valuations. CoreWeave’s share price has more than doubled this year, while Lambda’s $480 million raise at a $4 billion valuation underscores how much capital is flowing into this space.

The momentum comes from a simple but powerful proposition: making AI compute affordable and accessible to a wider set of enterprises and developers.

Partnerships are central to this strategy. NVIDIA’s backing gives Neoclouds credibility and access to top-tier Graphics Processing Units (GPUs). Dell’s launch of liquid-cooled, NVIDIA-powered systems with CoreWeave shows how quickly AI-optimized platforms are being brought to market.


The power illusion of Neoclouds

Neoclouds have positioned themselves as the answer for enterprises seeking affordable AI infrastructure. CoreWeave, Crusoe, and Lambda have become go-to partners for AI-native startups and developers because of their flexible rental models. Yet focusing only on chip access creates a distorted picture of capability.

Beyond GPU cost and availability: TCO, deployment flexibility, ecosystem support

Enterprise adoption depends on total cost of ownership (TCO), integration, and ecosystem readiness. Networking, storage, and orchestration overheads often offset headline pricing, reducing the affordability advantage. Hyperscalers, with vertically integrated platforms, are better positioned to absorb these costs and provide compliance, governance, and data services.
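To make the TCO point concrete, the short Python sketch below compares a headline GPU rate with an effective rate once networking, storage, and orchestration overheads are added. Every figure, class name, and function name here is a hypothetical placeholder chosen for illustration, not actual provider pricing; the point is only that a large headline gap can shrink once overheads are counted.

```python
from dataclasses import dataclass


@dataclass
class ProviderCosts:
    """Per-GPU-hour cost components (all values are hypothetical, in USD)."""
    gpu_hourly_rate: float             # headline GPU rental price
    networking_per_gpu_hour: float     # e.g. data egress, interconnect
    storage_per_gpu_hour: float        # e.g. dataset and checkpoint storage
    orchestration_per_gpu_hour: float  # e.g. scheduling, MLOps tooling

    def effective_hourly_rate(self) -> float:
        """Headline rate plus all overheads, per GPU-hour."""
        return (
            self.gpu_hourly_rate
            + self.networking_per_gpu_hour
            + self.storage_per_gpu_hour
            + self.orchestration_per_gpu_hour
        )


def total_cost(costs: ProviderCosts, gpu_count: int, hours: float) -> float:
    """Total cost of running gpu_count GPUs for the given number of hours."""
    return costs.effective_hourly_rate() * gpu_count * hours


# Illustrative numbers only: assumed for this sketch, not real quotes.
neocloud = ProviderCosts(2.50, 0.40, 0.30, 0.50)
hyperscaler = ProviderCosts(4.00, 0.10, 0.10, 0.10)

headline_gap = hyperscaler.gpu_hourly_rate - neocloud.gpu_hourly_rate
effective_gap = hyperscaler.effective_hourly_rate() - neocloud.effective_hourly_rate()

print(f"Headline gap per GPU-hour:  ${headline_gap:.2f}")
print(f"Effective gap per GPU-hour: ${effective_gap:.2f}")
print(f"Neocloud, 64 GPUs x 720 h:    ${total_cost(neocloud, 64, 720):,.0f}")
print(f"Hyperscaler, 64 GPUs x 720 h: ${total_cost(hyperscaler, 64, 720):,.0f}")
```

Under these assumed inputs the Neocloud remains cheaper, but its advantage per GPU-hour is well below what the headline rates suggest. Substituting real quotes and measured overheads into the same structure is what turns this into a usable comparison.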

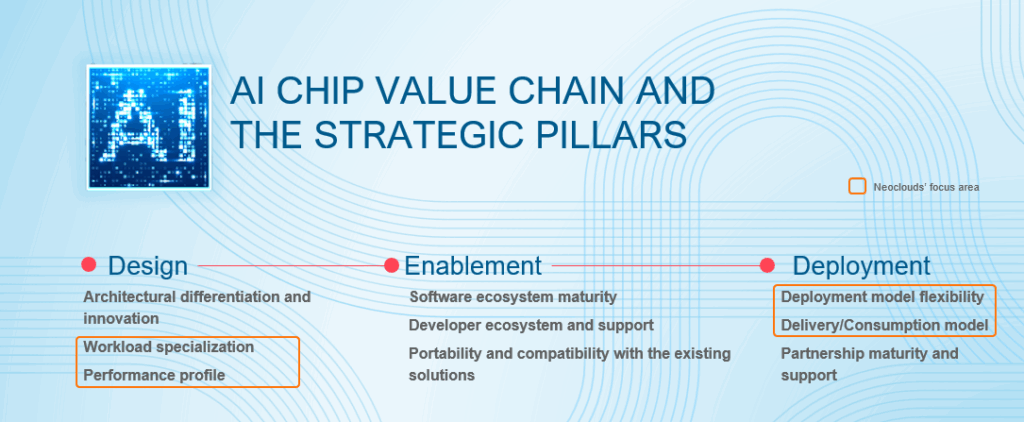

Some Neoclouds, such as CoreWeave, are beginning to expand into AI-optimized storage and MLOps tooling, but these capabilities remain nascent compared with hyperscalers’ mature offerings. The AI chip value chain spans three layers: design, enable, and deploy. Neoclouds focus mainly on the deploy layer and remain thin in the integration and orchestration capabilities that drive enterprise-scale impact.

Trade-offs: specialization vs. resilience, agility vs. lock-in, short-term savings vs. long-term viability

Neoclouds win on agility and specialization, making them attractive for startups and burst training needs. But this strength comes with fragility. Heavy reliance on NVIDIA creates exposure to a single supplier with outsized pricing power.

Some providers, such as Crusoe, are trying to diversify by partnering with Advanced Micro Devices (AMD), but the imbalance remains significant.

Neoclouds also operate on thinner margins and depend on investor funding, which leaves enterprises that make long-term bets on them carrying more risk.

Strategic fit: when Neoclouds make sense vs. when hyperscalers or others are better

Neoclouds have carved out a role by focusing on specialized workloads. They are well suited for large-scale model training, burst capacity for AI-native startups, and sovereign AI projects where infrastructure control is critical. Their customer profile reflects this positioning.

CoreWeave’s largest client is Microsoft, which uses its capacity as an extension of Azure rather than a replacement. Beyond that, most customers are AI startups and specialized providers rather than large enterprises.

So far, this has made Neoclouds more complementary than competitive. Hyperscalers have not prioritized GPU as a Service (GPUaaS) as a standalone offering, leaving space for Neoclouds to fill capacity gaps. But with hyperscalers now investing directly in AI chips, the dynamic could shift. If they choose to bundle GPUaaS more aggressively into their platforms, the boundary between partnership and competition may start to blur.

Taken together, these factors suggest that the influence of Neoclouds rests on a narrow base. They fill important gaps in access to high-performance chips, but their ability to support the broader enterprise AI journey remains limited. The next consideration is how enterprises should interpret this positioning when weighing Neoclouds against more integrated alternatives.

Viewing Neoclouds through the ‘Triple-A Lens’: what to weigh before jumping in

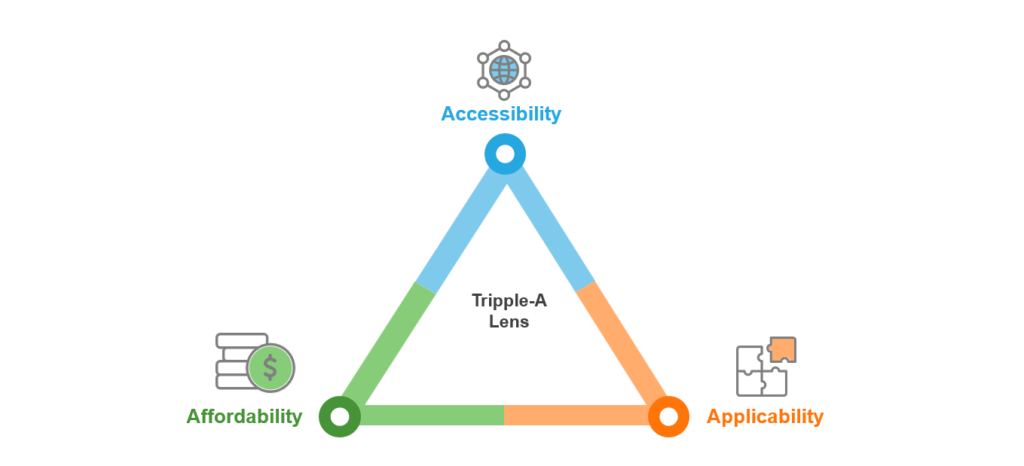

Enterprises evaluating Neoclouds should view them through the Triple-A Lens: affordability, accessibility, and applicability. Neoclouds perform well on the first two. They lower costs and offer faster access than hyperscalers, where long procurement cycles and opaque pricing models remain pain points.

Applicability, however, is where gaps begin to appear. Enterprise AI adoption requires more than hardware. Integrating workloads into secure, compliant, and globally distributed environments is essential, and hyperscalers have already built deep capabilities across orchestration, governance, and deployment.

Neoclouds often lack this breadth. Their regional footprints remain limited outside the US and Europe, and their platforms do not yet match enterprise requirements for multi-cloud integration or mission-critical continuity. This limits their role to niche use cases such as model training rather than broad enterprise adoption.

For Chief Information Officers (CIOs) and Chief Technology Officers (CTOs) committing to multi-year AI strategies, financial strength matters as much as technical capability. An offering that is compelling today could expose enterprises to continuity risks tomorrow.

The future of Neoclouds: open questions ahead

The path forward for Neoclouds is still uncertain, and several scenarios could shape their trajectory.

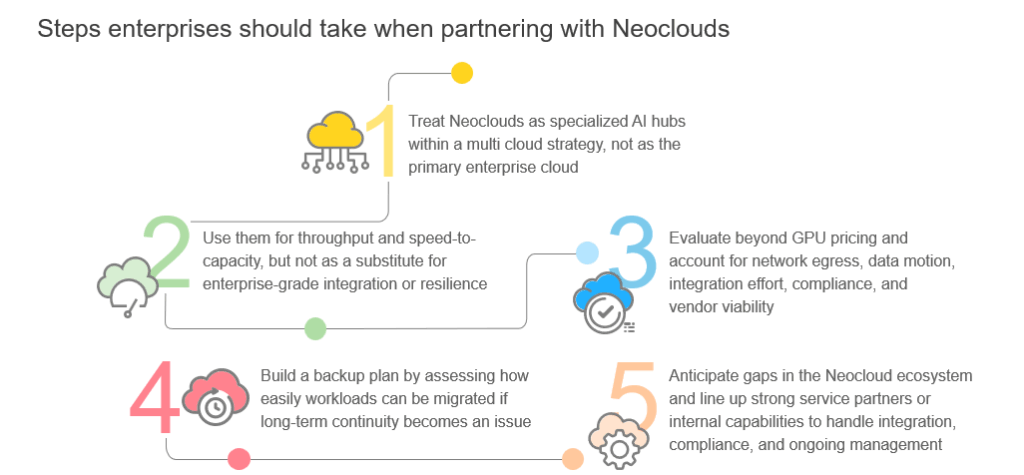

Integration into multicloud? Enterprises may use Neoclouds as niche partners within broader strategies, supplying burst GPU capacity or sovereign workloads. CoreWeave’s role as a supplemental provider to Microsoft shows the potential, but scaling this model remains a question.

Market consolidation? The field is crowded with CoreWeave, Lambda, Crusoe, and others, yet by 2027 most capacity could sit with just two or three players. With NVIDIA backing many of them, smaller providers may struggle to differentiate.

Hyperscaler pushback? If AWS, Azure, or Google cut GPU pricing or expand GPU-as-a-service, Neoclouds could lose their affordability edge. Margins are already thin, leaving them vulnerable to hyperscaler moves.

Shift toward full-stack AI hyperscalers? As Neoclouds add layers such as storage, MLOps, and infrastructure control, are they inching toward becoming mini hyperscalers themselves? CoreWeave’s recent moves suggest it’s possible, but whether these efforts lead to real platform depth or just a more complex version of GPUaaS remains to be seen.

The coming years will decide whether Neoclouds become integral parts of enterprise multicloud, consolidate into a few scale players, or get absorbed by larger incumbents. What remains clear is that their survival hinges on adding applicability and resilience to affordability and accessibility.

If you found this blog interesting, check out our recent blog, Agentic AI: True Autonomy Or Task-based Hyperautomation?, which explores a related AI topic.

To discuss the latest AI trends in more depth, please reach out to Rachita Rao and Deepti Sekhri.