From Risk to Resilience: The Case for Human + AI Gen AI Training

Generative AI (gen AI) is no longer confined to the edge of innovation. It is now embedded in enterprise operations across sectors and industries, from banking and healthcare to education and gaming. Its applications span content generation, fraud detection, personalized engagement, and workflow optimization, among others.

As adoption grows, so do the risks and expectations. Gen AI systems are now foundational to enterprise strategy, which makes it essential to examine how they are trained, governed, and aligned with evolving accountability standards.


Key risks with gen AI output

As gen AI becomes more deeply embedded in enterprise workflows, the risks associated with its outputs also become more pronounced.

- Bias in gen AI outputs remains a critical challenge because training data is drawn from a wide range of public and digital sources. These inputs often carry embedded stereotypes and underrepresent certain groups, which can lead to skewed or exclusionary responses

- Hallucinations, outputs that sound plausible but are factually incorrect or fabricated, can severely undermine decision quality, increase operational inefficiency, and weaken customer trust. They reduce the reliability of AI-generated insights and erode stakeholder confidence when surfaced externally

- AI-generated content can be misused for malicious purposes, especially when it is difficult to distinguish from real media. This increases the risk of disinformation, impersonation, and reputational damage, particularly in high-visibility contexts

- Inadequately trained models are more susceptible to prompt exploitation, where seemingly innocuous inputs are used to elicit inappropriate or risky outputs

- Explainability remains a significant challenge. When a gen AI model produces an output, the inability to clearly trace how or why it arrived at that conclusion limits transparency and weakens trust. This lack of interpretability becomes especially problematic in enterprise settings where decisions must be reviewed, justified, and aligned with regulatory or ethical standards.

- When models are not regularly exposed to new, domain-specific, or human-validated inputs, they risk stagnation. This results in repetitive, less relevant outputs that fail to reflect evolving enterprise needs. For instance, a gen AI system trained primarily on English-language financial terminology may misclassify regional banking terms, leading to miscommunication in localized client interactions

These challenges have tangible consequences for enterprises. Flawed AI behavior can lead to reputational damage, financial loss from misclassification or messaging errors, legal exposure due to noncompliance, and erosion of long-term user trust. Collectively, these risks reinforce the need for organizations to embed safety, explainability, and accountability into every stage of gen AI development and deployment.

Training methodologies for responsible gen AI

Training strategies for gen AI work best when grounded in practical enterprise conditions and real-world model behavior. The aim is to improve performance while keeping models aligned with domain-specific workflows, compliance expectations, and end-user needs.

- Red teaming enables enterprises to proactively uncover vulnerabilities in gen AI systems by simulating adversarial prompts and misuse scenarios. This prepares systems to perform safely under unexpected or manipulative inputs

- Prompt engineering gives organizations control over model behavior by shaping how tasks are framed. It improves the reliability of outputs, especially in regulated or sensitive enterprise contexts

- Reinforcement Learning from Human Feedback (RLHF) enhances alignment by incorporating human judgment into model optimization cycles. This results in outputs that better reflect ethical nuance, contextual sensitivity, and organizational values

- Retrieval-Augmented Generation (RAG) dynamically grounds model responses in live, enterprise-trusted knowledge repositories. This improves factual accuracy, allows the underlying knowledge to be versioned and updated without retraining the model, and provides clearer audit trails in knowledge-sensitive domains

- Supervised fine-tuning enables precise adaptation of gen AI models using domain-specific datasets. It helps embed organizational workflows, compliance language, and task-specific expectations directly into the model's learned weights

Together, these techniques form a cohesive foundation for building gen AI systems that are not only technically sound but also operationally resilient and aligned with enterprise priorities.
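To make the RAG technique from the list above more concrete, here is a minimal sketch of how retrieval can ground a prompt and produce an audit trail. It assumes a tiny in-memory knowledge base and a simple keyword-overlap retriever standing in for the vector or search index a production system would typically use; the documents, `retrieve`, and `build_grounded_prompt` are hypothetical names for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    version: str
    text: str


# Hypothetical enterprise-approved knowledge base (illustrative content only).
# A real deployment would query a vector or search index instead.
KNOWLEDGE_BASE = [
    Document("policy-017", "v3", "Wire transfers above 10,000 USD require dual approval."),
    Document("policy-022", "v1", "Customer complaints must be acknowledged within 48 hours."),
    Document("faq-104", "v5", "Dormant accounts are reactivated after identity verification."),
]


def tokenize(text: str) -> set[str]:
    # Lowercase and strip trailing punctuation so "approval." matches "approval".
    return {w.strip(".,?!").lower() for w in text.split() if w.strip(".,?!")}


def retrieve(query: str, k: int = 2) -> list[Document]:
    """Rank documents by keyword overlap with the query (a stand-in for semantic search)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(d.text)), d) for d in KNOWLEDGE_BASE]
    scored = [(s, d) for s, d in scored if s > 0]  # drop documents with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:k]]


def build_grounded_prompt(query: str) -> tuple[str, list[str]]:
    """Return a prompt restricted to retrieved sources, plus versioned citations for auditing."""
    docs = retrieve(query)
    context = "\n".join(f"[{d.doc_id}@{d.version}] {d.text}" for d in docs)
    prompt = (
        "Answer using only the sources below. If they do not contain the answer, say so.\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )
    citations = [f"{d.doc_id}@{d.version}" for d in docs]
    return prompt, citations


prompt, citations = build_grounded_prompt("Do wire transfers need approval?")
print(citations)  # ['policy-017@v3']
```

Because each citation carries a document ID and version, a reviewer can later check exactly which approved source an answer relied on, which is the audit-trail benefit the RAG bullet describes. Updating a policy means editing the knowledge base, not retraining the model.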

Approaches to training gen AI models

The way organizations translate training principles into action, whether through internal development or external partnerships, determines how effectively gen AI systems scale, maintain compliance, and deliver measurable value across enterprise functions.

Organizations typically adopt one of two primary routes:

- Internal training allows enterprises to build and manage gen AI models using proprietary data and internal governance frameworks. This ensures better alignment with domain needs, stronger data privacy, and deeper integration into existing workflows. However, it also demands significant investment in infrastructure, skilled teams, and time

- External training involves collaborating with AI vendors, service providers, research institutions, and academic labs. This accelerates model access and deployment, provides exposure to cutting-edge innovation, and reduces upfront infrastructure costs. On the other hand, it can introduce data security concerns and limit the level of customization and control

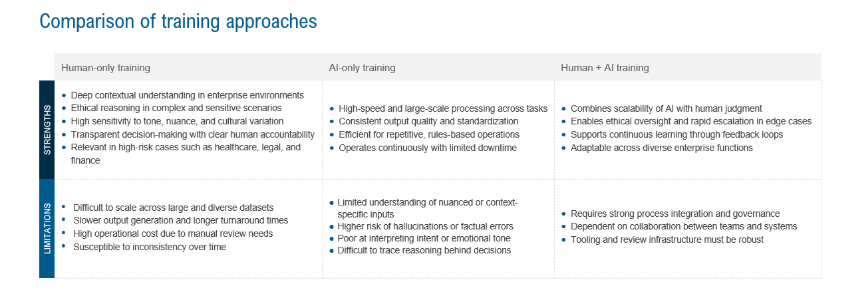

Once a training approach is selected, organizations must also consider the balance between human and AI roles in model training. The choice of methodology has significant implications for gen AI performance.

Human-AI synergy therefore becomes a strategic asset, enabling continuous calibration of gen AI models to evolving enterprise norms, market shifts, and regulatory changes.

Final thoughts

The limitations of human-only or AI-only training are particularly visible when gen AI is used in complex domains. Human-led training provides contextual judgment but does not scale easily, while AI-led training offers efficiency but often struggles with ethical reasoning and interpretability. Human + AI training creates a dynamic feedback loop in which each side learns from the other. Human reviewers can flag failure patterns, suggest better prompts, or adjust fine-tuning priorities. Over time, these insights can feed back into model retraining, improving both performance and alignment.

A hybrid model offers a balanced alternative, combining the speed and consistency of AI with the oversight and contextual awareness of human reviewers. In healthcare, for example, where accuracy is critical, AI can assist with summarizing clinical records while human professionals validate medical accuracy and context. In legal workflows, gen AI may draft contract clauses or case summaries, with legal experts refining the language and ensuring compliance. These scenarios show how AI and human input complement each other effectively. The approach is particularly relevant in high-stakes environments where decisions require both precision and accountability.

To operationalize training in a human + AI setting, enterprises need to focus on several key areas: investing in moderator tooling to maintain reviewer productivity, defining confidence-scoring rules that support escalation logic, and segmenting tasks so that human effort is prioritized where it adds the most value. Enterprises also need feedback loops that allow learnings to inform model updates over time, and governance frameworks that clarify responsibility and escalation pathways.
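The escalation logic and feedback loops mentioned above can be sketched roughly as follows. This assumes each model output arrives with a confidence score between 0 and 1 and a task-segment label; the segment names, threshold values, `route` function, and `FeedbackLog` class are all hypothetical and would in practice be set by an organization's own governance framework.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    AUTO_APPROVE = "auto_approve"
    HUMAN_REVIEW = "human_review"
    EXPERT_ESCALATION = "expert_escalation"


# Hypothetical per-segment thresholds: (auto-approve at or above, escalate below).
# Higher-stakes segments demand more confidence before skipping human review.
THRESHOLDS = {
    "marketing_copy": (0.70, 0.40),
    "clinical_summary": (0.95, 0.80),
    "contract_clause": (0.90, 0.75),
}


@dataclass
class Output:
    task: str
    text: str
    confidence: float


@dataclass
class FeedbackLog:
    """Collects reviewer decisions so they can inform later prompt or fine-tuning updates."""
    records: list[tuple[str, str, bool]] = field(default_factory=list)

    def record(self, output: Output, route: Route, accepted: bool) -> None:
        self.records.append((output.task, route.value, accepted))

    def rejection_rate(self, task: str) -> float:
        # Share of reviewed outputs in this segment that reviewers rejected.
        reviewed = [accepted for t, _, accepted in self.records if t == task]
        return 0.0 if not reviewed else 1 - sum(reviewed) / len(reviewed)


def route(output: Output) -> Route:
    # Unknown task types default to expert review rather than auto-approval.
    if output.task not in THRESHOLDS:
        return Route.EXPERT_ESCALATION
    auto_at, escalate_below = THRESHOLDS[output.task]
    if output.confidence >= auto_at:
        return Route.AUTO_APPROVE
    if output.confidence < escalate_below:
        return Route.EXPERT_ESCALATION
    return Route.HUMAN_REVIEW
```

With these example thresholds, a clinical summary scored at 0.9 goes to human review, while marketing copy at the same score is auto-approved. Tightening a segment's thresholds shifts more of its volume to reviewers, and a rising rejection rate in one segment is the kind of signal that could feed back into retraining priorities.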

As enterprises move from experimentation to deep integration, those that treat training as a strategic, ongoing investment, powered by both humans and AI, will lead the next wave of responsible gen AI transformation.

If you have any questions, are interested in exploring gen AI training, AI safety, or broader trust and safety initiatives, or would like to discuss related themes in more detail, feel free to contact Dhruv Khosla or Nimisha Awasthi.