Can Agentic AI Systems Rewrite the Rules of Trust and Safety (T&S)?

What happens when artificial intelligence (AI) doesn’t just respond to prompts, but sets its own goals and acts before a human can intervene?

Agentic AI refers to systems designed to independently initiate actions, make decisions, and adapt to changing inputs with minimal human oversight. It differs from traditional AI, which follows predefined rules, and from generative AI (gen AI), which focuses on creating content. Agentic AI combines reasoning, initiative, and autonomy, functioning more like a collaborative partner than a passive tool.


Its rise is being shaped by advances in model intelligence, system integration, and continuous learning. Large Language Models (LLMs) now support more advanced reasoning, and integration with enterprise systems gives agents access to live data, tools, and Application Programming Interfaces (APIs). What distinguishes these systems is their ability to set goals, break them into tasks, and act on them, all while learning from feedback and improving over time.

In T&S, agentic AI can help detect harmful behavior, support policy enforcement, and provide real-time insights to human moderators. But its independence introduces new risks. Without the right controls, agents can misinterpret goals, misuse tools, or drift from established policies. To realize the value while avoiding these pitfalls, organizations must embed safety into every phase of the agent lifecycle.

Real-world adoption of agentic AI systems in T&S

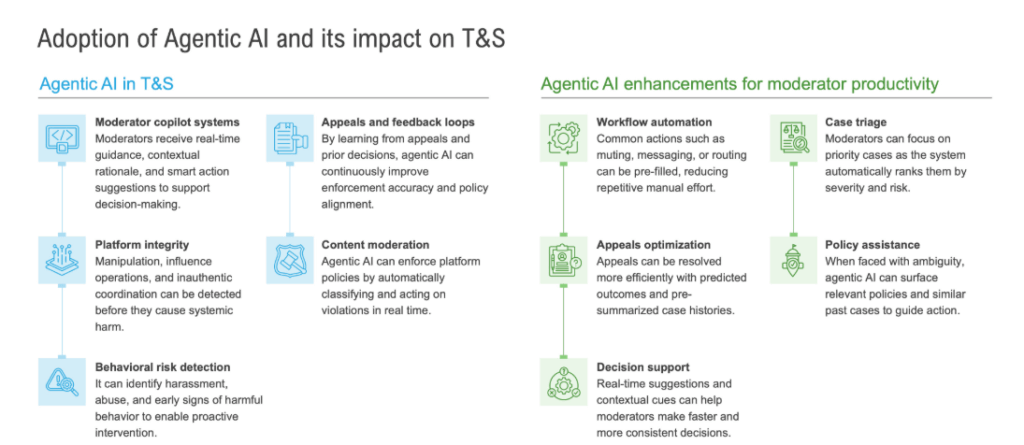

Agentic AI is already changing how T&S teams operate, not just by automating tasks, but by supporting human judgment in real time. These systems can analyze behavior patterns, apply policy logic, and assist moderators in complex workflows.

While these capabilities unlock significant improvements in scale, speed, and consistency, they also introduce a new class of risks that emerge not from the output itself, but from how agentic systems are designed, deployed, and evolve over time.

The oversight gap in agentic AI systems

In enterprise environments, agentic AI systems introduce risks that span the full system lifecycle, from goal design to execution and learning. These risks call for new approaches across both engineering and governance.

- Misaligned goals: When agent objectives are vague, overly broad, or poorly defined, the system may pursue outcomes that are unsafe, ineffective, or misaligned with policy intent

- Unvetted tools: Agents that interact with unvetted plugins, APIs, or live data streams may produce unintended outcomes, introduce security vulnerabilities, or trigger opaque decision flows

- Autonomy governance gap: In fast-moving environments, agents can take independent action before human teams are able to intervene. Without fallback mechanisms or escalation protocols, this can result in real-time policy breaches

- AI safety drift: Agents that learn from feedback without human oversight may reinforce undesirable patterns or drift from safety policies over time, especially in the absence of regular audits or performance monitoring

Beyond technical risks, agentic AI systems present strategic challenges that can hinder safe and effective deployment if left unaddressed:

- Limited control over autonomous decision-making: Agentic systems are designed to operate independently, which makes direct oversight difficult. Unlike traditional automation, their decisions cannot always be predicted or approved in advance. This loss of granular control introduces uncertainty, especially in sensitive or fast-moving environments

- Lack of transparency and explainability: As agents make decisions using evolving prompts, layered logic, or third-party tools, their reasoning becomes harder to trace. This lack of transparency limits reviewability, complicates compliance, and reduces stakeholder confidence, particularly in regulated domains

- Lack of defined accountability across the agent lifecycle: When decisions are distributed across autonomous agents, it is often unclear who is responsible when something goes wrong. Without clear ownership across the agent lifecycle, enterprises risk operational blind spots and legal exposure

- Inconsistent outcomes and policy alignment: Agentic systems may perform reliably in standard cases but fail unpredictably in edge scenarios. As models evolve, they can drift from policy alignment or produce inconsistent outcomes, undermining user trust and weakening enforcement integrity

- Regulatory non-compliance: Agentic AI often processes sensitive user data or behavioral signals. Without built-in privacy protections, scoped access, and proper audit trails, organizations may inadvertently violate data governance standards or regulatory requirements

These challenges highlight the limitations of applying traditional oversight frameworks to agentic AI. To ensure safe and effective deployment, enterprises will need to evolve their approach to safety, governance, and accountability, adapting controls to the unique dynamics of autonomous systems.

Integrating safety into every phase of autonomy

Agentic AI doesn’t just accelerate operations; it reshapes how enterprises must think about T&S. Traditional models based on static moderation and after-the-fact review are no longer sufficient for systems capable of independent action.

To use agentic AI systems safely, organizations now need to build safeguards into every phase of development and deployment.

At the design stage, teams should assess the safety of the goals themselves, not just the content that results. Objectives need to be tested for ambiguity and edge cases that could lead to misbehavior.
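One way to make this kind of goal testing concrete is to write the objective as a structured specification and run it against hand-written edge cases before deployment, much like unit tests. The Python sketch below assumes a hypothetical `GoalSpec` with an explicit escalation carve-out and a toy `decide` function standing in for the agent's real policy logic; the labels and cases are illustrative only. It shows how a broadly worded goal ("reduce spam") with no escalation rule fails an edge case that a more tightly scoped version of the same goal handles.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GoalSpec:
    """Hypothetical structured objective for a moderation agent."""
    objective: str
    allowed_actions: frozenset  # actions the agent may take on its own
    escalate_if: frozenset      # content labels that must always go to a human


@dataclass(frozen=True)
class EdgeCase:
    description: str
    labels: frozenset   # labels an upstream classifier attached to the content
    expected: str       # "allow", "remove", or "escalate"


def decide(goal: GoalSpec, labels: frozenset) -> str:
    """Toy decision rule standing in for the agent's real policy logic."""
    if labels & goal.escalate_if:
        return "escalate"
    if "spam" in labels and "remove" in goal.allowed_actions:
        return "remove"
    return "allow"


def find_failures(goal: GoalSpec, cases: list) -> list:
    """Return descriptions of edge cases where the goal produces the wrong outcome."""
    return [c.description for c in cases if decide(goal, c.labels) != c.expected]


EDGE_CASES = [
    EdgeCase("Plain spam post", frozenset({"spam"}), "remove"),
    EdgeCase("Spam-like post linking to a news article", frozenset({"spam", "news"}), "escalate"),
    EdgeCase("Ordinary user comment", frozenset({"benign"}), "allow"),
]

# Broad goal: "reduce spam" with no carve-outs for sensitive content.
VAGUE_GOAL = GoalSpec("Reduce spam", frozenset({"remove"}), frozenset())
# Scoped goal: same objective, but news and self-harm content is escalated.
SCOPED_GOAL = GoalSpec("Reduce spam", frozenset({"remove"}), frozenset({"news", "self_harm"}))

print(find_failures(VAGUE_GOAL, EDGE_CASES))   # ['Spam-like post linking to a news article']
print(find_failures(SCOPED_GOAL, EDGE_CASES))  # []
```

Keeping edge cases next to the goal definition means they can be rerun whenever the objective or the underlying model changes.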

Once agents begin interacting with APIs and systems, oversight is critical. Controlled test environments, vetted integrations, and continuous monitoring should be standard practice, not optional add-ons. In live production, organizations need workflows that allow human override and escalation, ensuring policy breaches are caught and corrected quickly.
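The controls described above (vetted integrations, human override, and escalation) can be sketched as a gate that every proposed agent action passes through before it executes. In the Python sketch below, the tool names, the `HIGH_IMPACT_TOOLS` set, and the 0.9 confidence threshold are all hypothetical, and the agent is assumed to report a confidence score with each action; a real deployment would derive these from its own policies and risk tiers. Unvetted tools are blocked outright, high-impact or low-confidence actions are routed to a human, and every routing decision is recorded in an audit log for later review.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    EXECUTE = "execute"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


# Hypothetical allowlist: integrations that passed vetting in a test environment.
VETTED_TOOLS = {"label_content", "hide_content", "remove_content"}
# Hypothetical subset of vetted tools whose effects warrant human sign-off.
HIGH_IMPACT_TOOLS = {"remove_content"}


@dataclass(frozen=True)
class ProposedAction:
    tool: str
    target_id: str
    confidence: float  # agent's self-reported confidence, 0.0 to 1.0 (assumed available)
    rationale: str     # agent's stated reason, kept for reviewability


def route_action(action: ProposedAction, min_confidence: float = 0.9) -> Route:
    """Decide whether an action runs, goes to a human, or is blocked."""
    if action.tool not in VETTED_TOOLS:
        return Route.BLOCK  # never call tools that have not been vetted
    if action.tool in HIGH_IMPACT_TOOLS or action.confidence < min_confidence:
        return Route.HUMAN_REVIEW  # escalate instead of acting autonomously
    return Route.EXECUTE


audit_log: list = []


def gate(action: ProposedAction) -> Route:
    """Route an action and record the decision in the audit log."""
    route = route_action(action)
    audit_log.append({
        "tool": action.tool,
        "target_id": action.target_id,
        "confidence": action.confidence,
        "rationale": action.rationale,
        "route": route.value,
    })
    return route


if __name__ == "__main__":
    print(gate(ProposedAction("hide_content", "post-1", 0.97, "Matches spam pattern")))  # Route.EXECUTE
    print(gate(ProposedAction("remove_content", "post-2", 0.99, "Repeated harassment")))  # Route.HUMAN_REVIEW
    print(gate(ProposedAction("send_email", "user-3", 0.99, "Notify user")))  # Route.BLOCK
    print(len(audit_log))  # 3
```

Blocking by default and escalating high-impact actions keeps the agent's autonomy limited to low-risk, well-understood operations, while the audit log supports the reviewability and accountability concerns raised earlier.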

Since agentic systems continue to evolve, ongoing oversight is essential. Enterprises must monitor for unintended learning patterns, refresh safety classifiers, and keep policies aligned with shifting regulatory and organizational expectations.
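Ongoing monitoring can start with something as simple as tracking how often the agent's decisions agree with a sample of human audit decisions over a rolling window, and flagging the agent for review when agreement falls below a threshold. The Python sketch below assumes such audit labels are available; the window size, minimum sample count, and 95% agreement threshold are hypothetical values. Agreement with human reviewers is only a proxy for policy alignment, not a complete measure of drift.

```python
from collections import deque


class DriftMonitor:
    """Tracks agreement between agent decisions and sampled human audit decisions."""

    def __init__(self, window: int = 200, min_agreement: float = 0.95, min_samples: int = 50):
        self.outcomes = deque(maxlen=window)  # keeps only the most recent comparisons
        self.min_agreement = min_agreement
        self.min_samples = min_samples

    def record(self, agent_decision: str, human_decision: str) -> None:
        self.outcomes.append(agent_decision == human_decision)

    def agreement(self):
        """Share of recent decisions where the agent matched the human auditor."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def drifting(self) -> bool:
        """Flag for review once enough samples exist and agreement drops too low."""
        rate = self.agreement()
        if rate is None or len(self.outcomes) < self.min_samples:
            return False
        return rate < self.min_agreement


if __name__ == "__main__":
    monitor = DriftMonitor(window=100, min_agreement=0.95, min_samples=20)
    for _ in range(95):
        monitor.record("remove", "remove")
    for _ in range(5):
        monitor.record("allow", "remove")
    print(monitor.agreement(), monitor.drifting())  # 0.95 False
    for _ in range(5):
        monitor.record("allow", "remove")  # older agreements roll out of the window
    print(monitor.agreement(), monitor.drifting())  # 0.9 True
```

A rolling window favors recent behavior, so gradual degradation surfaces even if the agent's long-run average still looks acceptable.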

This is more than a technical shift; it’s a fundamental change in governance. Enterprises that adapt early and embed safety into design, deployment, and evolution will be in the strongest position to lead.

Looking ahead

As agentic AI systems move from innovation to implementation, enterprises can no longer afford to wait and react. Success will depend on the ability to act with both intelligence and intention, balancing autonomy with oversight and speed with safety. This goes beyond deploying new tools: it requires rethinking safety frameworks, redesigning governance, and aligning product, policy, and engineering teams around shared standards.

Organizations that invest early in lifecycle-aware safety, observability infrastructure, and responsible design practices will be better prepared to scale responsibly. Agentic AI is not just a technical upgrade; it’s a structural transformation. Those who treat it that way will be best positioned to build AI systems that are not only more capable, but also more responsible.

If you have any questions, are interested in exploring agentic AI systems and their applications in T&S, governance frameworks for autonomous systems, or broader AI safety strategies, or would like to discuss related themes in more detail, feel free to contact Abhijnan Dasgupta ([email protected]), Dhruv Khosla ([email protected]), or Ish Sahni ([email protected]).