Contracting Services with Intelligence: Adapting to the Agentic AI Shift

Artificial Intelligence (AI) has made tremendous strides in recent years, especially after Generative AI (GenAI) entered the public spotlight in 2023, capturing attention across industries.

Now, with Systems of Execution (SoE) and Agentic AI more broadly taking capabilities to the next level, the space is only heating up further. Systems of Execution are the next big leap beyond conventional Systems of Record and Systems of Engagement. They not only combine the key features of both predecessors (storing and managing data, and enabling interactions between users) but are also intelligent enough to act on that data. They serve as the intelligent execution layer of the enterprise, making decisions and running complex workflows autonomously.

In our previous blog, we looked at how contracting models may evolve with the growing adoption of Systems of Execution. There has long been a desire to shift to outcome-based models, where a larger portion of the payment is linked to tangible, measurable outcomes.

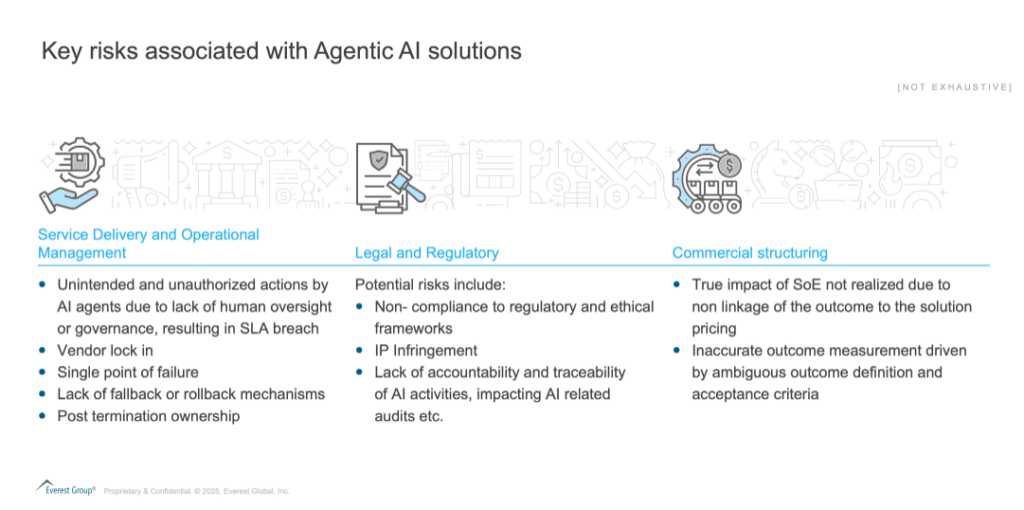

But understanding the shift in the pricing model for services is only part of the picture. As adoption of Systems of Execution grows, buyers are raising concerns about their efficiency, effectiveness, and foundational readiness. In this blog, we highlight those concerns, the key risks, and the contractual changes that can address most of them and make Systems of Execution work at scale.


Agentic AI: Common buyer inquiries

While Systems of Execution and Agentic AI promise productivity and other benefits, they bring a new layer of complexity to the procurement and contracting process. Multiple clauses, ranging from data privacy and integrity, legal frameworks, and technical integration to commercial constructs, are expected to undergo significant change. Among the most pressing buyer questions are:

- How does the Systems of Execution solution handle data privacy, especially for private and sensitive data? What safeguards ensure that AI models trained on our data are not leveraged for other clients?

- How does the solution comply with industry and regulatory frameworks in the region and ensure data sovereignty?

- What Key Performance Indicators (KPIs) are required to track the performance, reliability, and fault tolerance of agents?

- Who will own the Agentic AI solution and the generated output? Who will be liable in case of any Intellectual Property (IP) infringement issues?

- How do we protect ourselves from provider lock-in? Will we be able to use the solution after the deal term ends?

- How will responsibility and accountability be divided between AI agents and the Human in the Loop (HITL)? In a multi-provider scenario, how will the Agentic solution handle exception handoffs?

- How should we define the outcomes and the corresponding acceptance criteria or Definition of Done?

- Human productivity is measured by the Say/Do ratio. Will the same metric apply to agentic platforms?

- Will the termination clauses that apply to human labor also apply to Systems of Execution?

- We have provisions such as step-in rights and force majeure to deal with inadvertent failures. How will these provisions work in a complex agent-first, human-led world?

While these questions are pertinent, it is equally critical to understand the risks these issues may pose if they are not handled effectively.

What clauses should you rethink in the age of Agentic AI?

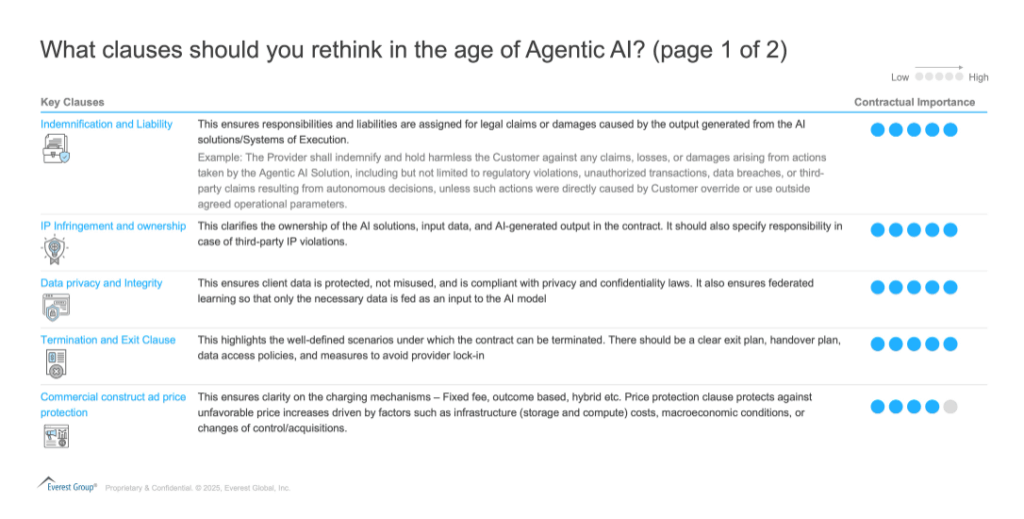

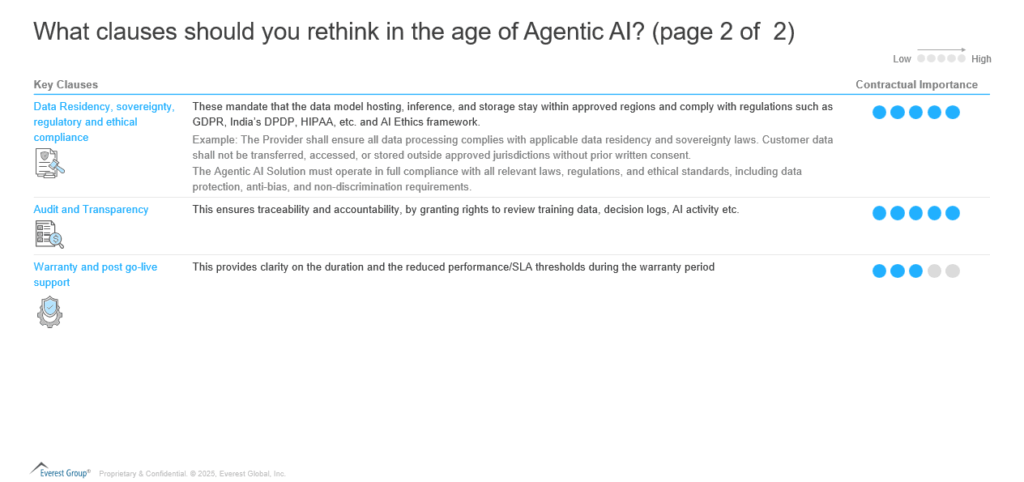

To mitigate some of these risks, buyers and providers need to rethink various facets of the contract to safeguard their interests and make the contractual clauses more robust and future-proof. The accompanying table shows the key clauses, their importance, and examples for some of them.

Along with these contractual nuances, it is critical to revisit and redefine the Service Level Agreements (SLAs) and the governance model to drive greater impact.

In this new system paradigm, traditional SLAs/KPIs might not be effective. Providers need to evolve traditional metrics and introduce AI-specific SLAs that can measure the performance and quantify the impact of Agentic AI systems. These can focus on output accuracy, productivity, and system performance. Some of these metrics can include:

- Human-to-Bot ratio

- Agent availability

- AI coverage

- Self-service rate

- AI-assisted FTE productivity over the deal term

- Order processing rate
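To make a few of these metrics concrete, here is a minimal Python sketch of how they might be computed from one reporting period's operational counts. The blog does not define formulas for these metrics, so every definition below, along with the `PeriodStats` fields and the sample figures, is an illustrative assumption that buyer and provider would need to agree on in the contract.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    """Hypothetical operational counts for one reporting period."""
    human_ftes: float                  # human full-time equivalents on the engagement
    active_agents: int                 # AI agents deployed in production
    agent_uptime_minutes: float        # minutes the agents were actually available
    scheduled_minutes: float           # minutes the agents were contracted to be available
    requests_total: int                # all requests received
    requests_touched_by_ai: int        # requests where an agent did any part of the work
    requests_resolved_without_human: int  # requests closed with no human intervention


def safe_ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def sla_metrics(stats: PeriodStats) -> dict[str, float]:
    """Compute assumed definitions of four of the AI-specific SLA metrics."""
    return {
        # Assumption: humans per deployed agent.
        "human_to_bot_ratio": safe_ratio(stats.human_ftes, stats.active_agents),
        # Assumption: share of scheduled time the agents were available.
        "agent_availability": safe_ratio(stats.agent_uptime_minutes, stats.scheduled_minutes),
        # Assumption: share of requests in which an agent took part.
        "ai_coverage": safe_ratio(stats.requests_touched_by_ai, stats.requests_total),
        # Assumption: share of requests resolved end to end without a human.
        "self_service_rate": safe_ratio(stats.requests_resolved_without_human, stats.requests_total),
    }


# Made-up figures for a 30-day month (43,200 minutes).
example = PeriodStats(
    human_ftes=20,
    active_agents=80,
    agent_uptime_minutes=43_000,
    scheduled_minutes=43_200,
    requests_total=1_000,
    requests_touched_by_ai=700,
    requests_resolved_without_human=450,
)
metrics = sla_metrics(example)
```

Writing the definitions down this explicitly, in whatever form, helps both parties avoid disputes later about what a metric such as "AI coverage" actually counts.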

Similarly, the governance model should evolve, and the governance board must have the right stakeholders to provide strategic oversight to AI operations. Some of their key responsibilities include:

- Defining a clear Responsible, Accountable, Consulted and Informed (RACI) matrix between hyperscalers, product vendors, configuration partners, buyers, and any other third parties

- Managing risk by overseeing the implementation of risk mitigation strategies and policies relevant to the Systems of Execution layer

- Handling any issues, exceptions, or escalations related to compliance, accountability, AI-generated output, and similar matters

- Stakeholder management to ensure seamless communication and operations

- Maintaining a separate, human-led agentic governance layer to manage the divide between human-led and agent-led issues

- Ensuring change management policies are designed and executed for an AI-first world
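As an illustration of the RACI responsibility above, the sketch below records a matrix as data and checks a common RACI convention: each activity has exactly one Accountable party. The party names come from the list above; the activities, the role assignments, and the `validate_raci` helper are hypothetical and serve only as an example.

```python
from enum import Enum


class Role(Enum):
    RESPONSIBLE = "R"
    ACCOUNTABLE = "A"
    CONSULTED = "C"
    INFORMED = "I"


# Parties named in the governance discussion.
PARTIES = ("hyperscaler", "product_vendor", "configuration_partner", "buyer")

# Hypothetical activities and assignments, for illustration only.
RACI = {
    "agent_platform_uptime": {
        "hyperscaler": Role.RESPONSIBLE,
        "product_vendor": Role.ACCOUNTABLE,
        "configuration_partner": Role.CONSULTED,
        "buyer": Role.INFORMED,
    },
    "exception_handoff_to_human": {
        "product_vendor": Role.CONSULTED,
        "configuration_partner": Role.RESPONSIBLE,
        "buyer": Role.ACCOUNTABLE,
    },
}


def validate_raci(matrix: dict, parties: tuple) -> list[str]:
    """Return a list of problems; an empty list means the matrix passes."""
    problems = []
    for activity, assignments in matrix.items():
        # Flag parties that are not part of the agreed governance model.
        for party in sorted(set(assignments) - set(parties)):
            problems.append(f"{activity}: unknown party '{party}'")
        # Convention assumed here: exactly one Accountable party per activity.
        accountable = [p for p, r in assignments.items() if r is Role.ACCOUNTABLE]
        if len(accountable) != 1:
            problems.append(
                f"{activity}: expected 1 Accountable party, found {len(accountable)}"
            )
    return problems


issues = validate_raci(RACI, PARTIES)
```

A check like this could be run whenever a party or activity is added, so that gaps in accountability surface before an incident rather than during one.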

Systems of Execution, with their underlying agentic bedrock, have the potential to redefine Information Technology (IT) operations and deliver productivity benefits and cost savings for buyers and providers alike.

But to realize these benefits, all possible risks must be evaluated, and an actionable plan must be laid out. Considering the risks and complexities, providers and buyers must adopt a pragmatic and strategic approach to contractual agreements to ensure optimal outcomes.

If you found this blog interesting, check out our blog Agentic AI: True Autonomy Or Task-based Hyperautomation? (Everest Group), which delves deeper into another aspect of Agentic AI.

If you have any questions or want to discuss the evolution of Agentic AI in more depth, please contact Prateek Gupta ([email protected]), Duttatreya Jena ([email protected]) and Vinamra Shukla ([email protected]).