
Safeguarding Social Media: How Effective Content Moderation Can Help Clean Up the Internet

The role that social media content plays has been under increasing scrutiny. With increased global regulations mandating the moderation of online content, outsourcing service providers can play a crucial role in helping organizations develop better policies and comply with Content Moderation (CoMo) and Trust & Safety (T&S) standards. Read on to learn the challenges faced by social media platforms in moderating content and how the rest of the ecosystem can supplement their efforts to create safer online spaces.

The importance of creating safer online spaces cannot be overstated, especially today, when social media platforms run the constant risk of becoming a breeding ground for objectionable content. To ensure that users stay protected from harmful content such as hate speech, violence, abuse, and nudity, social media platforms engage in what is called content moderation. Content moderation screens User Generated Content (UGC) and determines whether a particular piece of content, such as text, an image, or a video, adheres to the policies of the platform. This process can be performed by human moderators, by automated (AI/ML-enabled) machine moderation, or by both. The all-encompassing term for content moderation and other activities aimed at making online spaces safer is Trust and Safety (T&S).

For moderating content, online platforms can have an in-house team of moderators and technology, or they can outsource the service to a service provider or have a hybrid approach with an optimum mix of both.

Content Moderation (CoMo) outsourcing is a rapidly growing market, and for more reasons than one. Increasing mandates for greater moderation of the massive amount of potentially dangerous content being created and shared online have opened new opportunities for providers to help enterprises develop comprehensive CoMo and T&S policies and systems.

The need for content moderation has never been greater

For over a decade, online platforms have enabled billions of users worldwide to share content and connect with the world. As of January 2021, out of 4.66 billion active internet users, 90.1% (4.2 billion) were active social media users. This user base keeps growing, with people logging on to social media platforms from increasingly diverse regions of the world. The resulting explosion in the volume of content generated on social media platforms every day points to the increasing scale at which platforms need to deploy content moderation services effectively.

This sheer volume is compounded by the growing variety of content. Globally created UGC has moved beyond the written word to include videos, memes, GIFs, and live audio streaming, giving bad actors more avenues to spread harmful content.

The misuse of online platforms brings imminent repercussions

The COVID-19 pandemic was a grim reminder of how misinformation can spread like wildfire if left unchecked. As the amount and frequency of social media consumption increased, so did content containing inaccurate and misleading information about the pandemic, potentially complicating the public health response during the peak of COVID-19. Read more in our recent blog, Fighting Health Misinformation on Social Media – How Can Enterprises and Service Providers Help Restore Trust.

Enterprises also have to bear the brunt of inadequate moderation. For example, Parler, an unmoderated microblogging and social networking service with around 15 million users, was denied listing by Apple, Google, and Amazon Web Services (AWS) for failing to implement content moderation policies. Additionally, the audio-based social media platform Clubhouse faced criticism after reports emerged of anti-Semitic content shared by users of the platform.

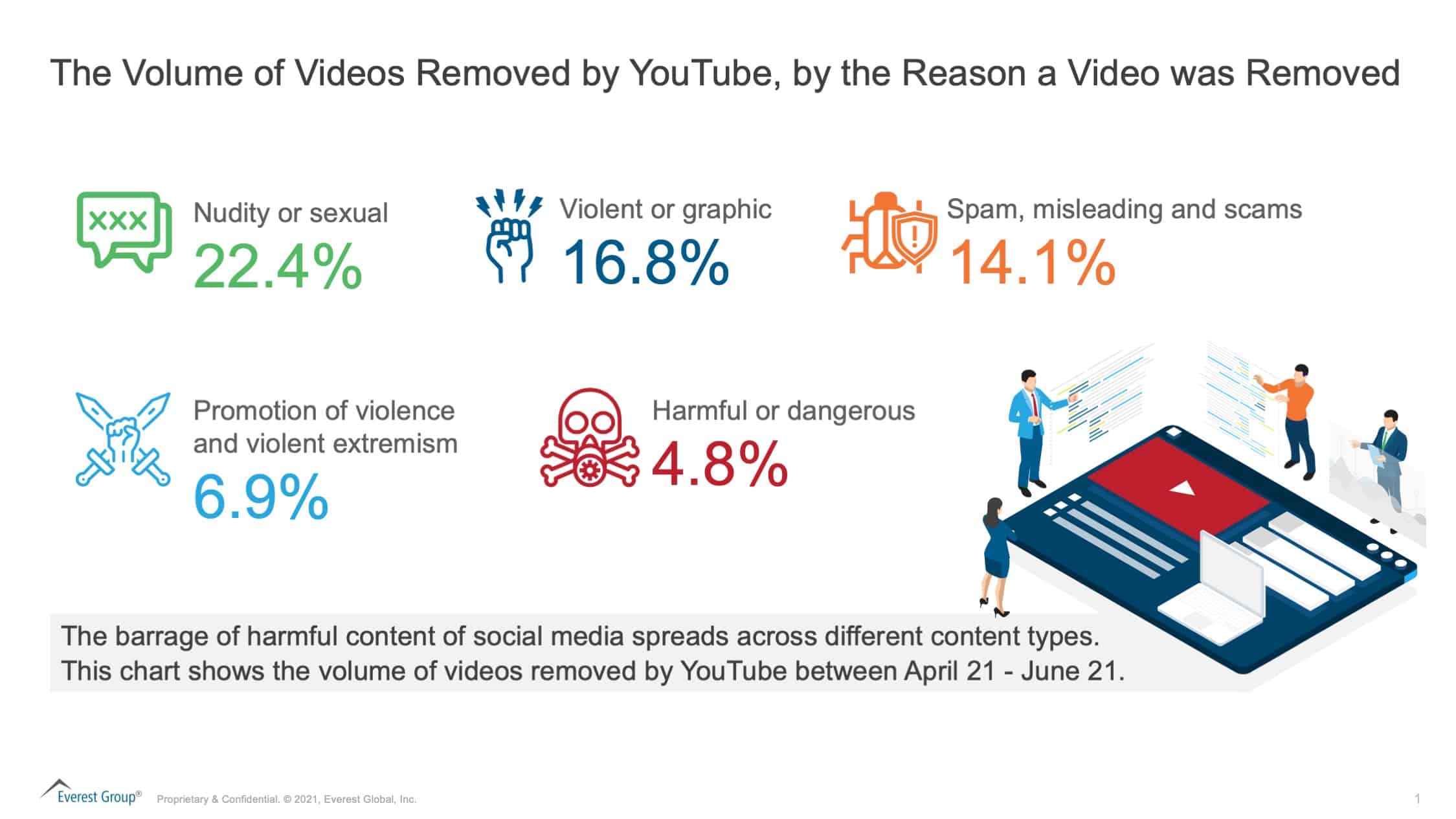

The accompanying graphic illustrates the growing demand to moderate content across social media platforms.

Addressing the problem together

Unfortunately, given the relatively nascent nature of content moderation services and the ever-evolving content threats from bad actors, the playbook for moderating is still emerging and is not sacrosanct.

All stakeholders (social media platforms, content moderation service providers, users, governments and regulatory authorities, and advertisers) are concerned with tackling the issue better because there is no ‘ideal state’ that can be pragmatically achieved when it comes to content moderation.

Social media platforms too face their own set of challenges – from creating an automated moderation system that is accurate and reliable enough to ensuring that the right human talent works alongside their technology for safer platforms.

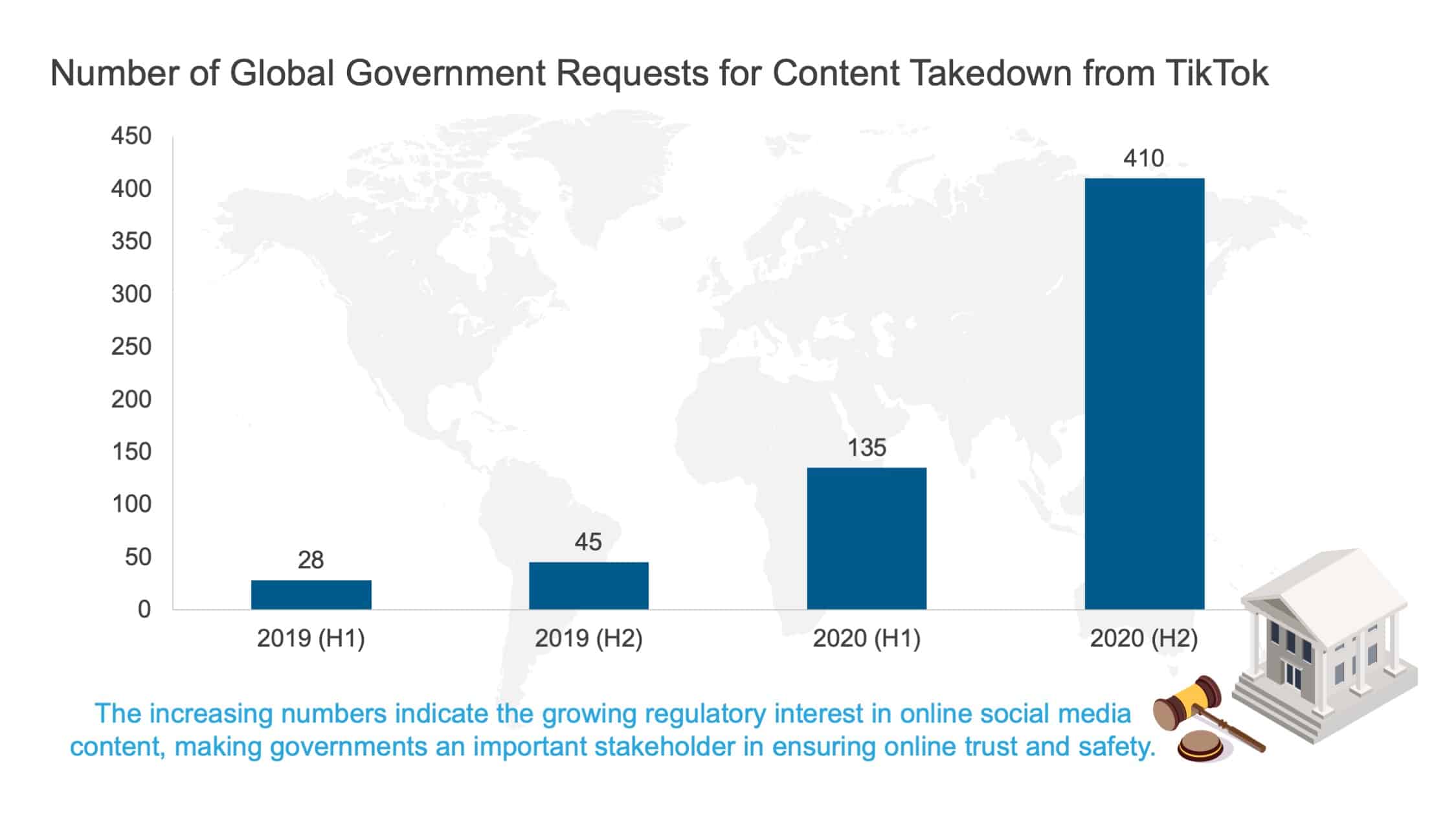

Regulatory intervention around content moderation has increased recently. Countries around the world are implementing new laws that set frameworks and rules for what content is permissible and what is prohibited. Here too, social media platforms need to walk a regulatory tightrope, adhering to local laws while upholding platform rules and broader laws related to universal human rights. The accompanying graphic shows the increased involvement of governments and regulatory authorities in social media platforms.

How can service providers complement social media platforms in their trust and safety practices?

Service providers are enabling social media platforms by:

Scale of services: Service providers are helping enterprises scale up their trust and safety services according to the unique needs of the enterprises.

Adequate location presence: Knowledge of geographical, cultural, and language-level nuances is crucial for determining whether content is safe. Here, service providers are boosting enterprise efforts by providing talent that can adequately moderate local content.

Enabling through technology: Service providers are not only enabling enterprises through human resources but also through technology. On the core moderation technology side, SaaS providers are helping enterprises with pre-trained APIs which are ready to deploy. For example, AI company Clarifai works with the social media platform 9GAG, checking 20,000 pieces of UGC daily for gore, drugs, and nudity with 95% accuracy compared to a human view.

Marrying automation with human moderation is the best way forward

Human moderators, in their efforts to keep our online spaces clean, are exposed to harmful content that can affect their own wellbeing. Automation is the strongest bet for reducing such exposure, but certain challenges remain.

Unfortunately, automated AI systems have not yet reached a level of reliability where they can be trusted to moderate all internet content. AI is yet to recognize the nuances behind a piece of content. In fact, at the onset of the pandemic, in the second quarter of 2020, when YouTube decided to increase the share of content moderated by its AI systems, ~11 million videos were removed from the platform, which was alarmingly higher than the usual rate. Around 320,000 of these video takedowns were then appealed, and half of them were reinstated, which was roughly double the usual figure.

Hence, the human-AI synergy for content moderation is our best way forward as the AI learns and evolves from human decisions in a bid to become more accurate in its working.
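One way to picture this human-AI pairing is a confidence-based routing rule: the model acts on its own only when it is very sure, and everything in between goes to a human moderator whose verdict is saved for later retraining. The Python sketch below is purely illustrative and is not taken from any platform's actual system. The class name `HybridModerationQueue`, the method names, the item IDs, and the 0.95 / 0.05 thresholds are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class HybridModerationQueue:
    # Hypothetical thresholds; real values would be tuned per policy and content type.
    remove_at: float = 0.95
    approve_at: float = 0.05
    pending_review: List[str] = field(default_factory=list)
    training_labels: List[Tuple[str, bool]] = field(default_factory=list)

    def route(self, item_id: str, violation_score: float) -> str:
        """Act automatically only when the model is confident; otherwise escalate."""
        if violation_score >= self.remove_at:
            return "remove"
        if violation_score <= self.approve_at:
            return "approve"
        self.pending_review.append(item_id)
        return "human_review"

    def record_human_decision(self, item_id: str, violates_policy: bool) -> str:
        """Store the moderator's verdict so it can later be used to retrain the model."""
        self.pending_review.remove(item_id)
        self.training_labels.append((item_id, violates_policy))
        return "remove" if violates_policy else "approve"


# Assumed model scores: estimated probability that each item violates policy.
queue = HybridModerationQueue()
scores: Dict[str, float] = {"post-1": 0.99, "post-2": 0.01, "post-3": 0.60}
outcomes = {item_id: queue.route(item_id, score) for item_id, score in scores.items()}
print(outcomes)

# A human moderator reviews the uncertain item and decides it is acceptable.
final = queue.record_human_decision("post-3", violates_policy=False)
print(final, queue.training_labels)
```

In a setup like this, widening the gap between the two thresholds sends more content to human reviewers (fewer wrongful takedowns, more moderator exposure), while narrowing it does the opposite. That is the trade-off described above.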

Enough work to do for years to come

We are in the early stages of watching the CoMo market’s growth, and the answer to the “HOW” of keeping our online spaces clean lies in the collective efforts of all stakeholders – governments can frame guidelines and legislation, civil society groups can help enterprises through research, and service providers can help enterprises scale their CoMo efforts. And as we endeavor to move towards such a state, here’s a shoutout to our content moderators, who strive day in and day out to ensure that our online spaces remain cleaner and safer, and bring the world together.

Learn more in our recent State of the Market Report, Content Moderators: Guardians of the Online Galaxy.