
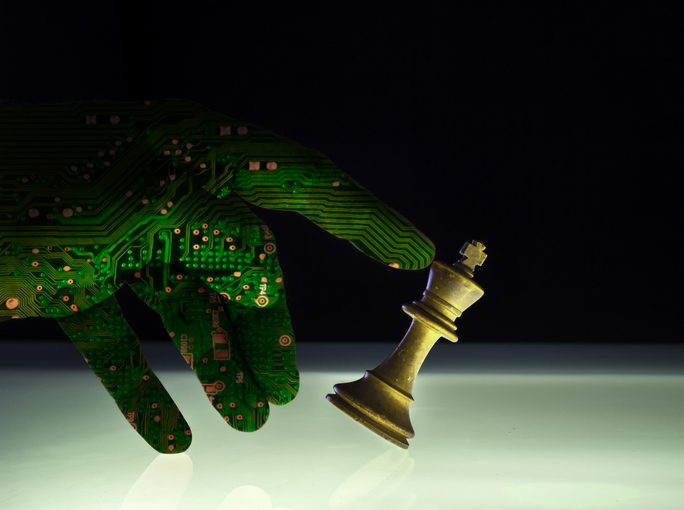

From Sci-Fi to Reality: Unraveling the Risks of Superintelligence

Superintelligence promises incredible advancements and solutions to the world’s biggest challenges, yet it also presents an ominous threat to society. As the lines between innovation and catastrophe blur, understanding the risks of AI is crucial. Read on for recommendations for moving forward in this uncharted territory.

The emergence of Generative Artificial Intelligence (GAI) has led enterprises, tech vendors, and entrepreneurs to explore many different use cases for this disruptive technology, while regulators seek to comprehend its wide-ranging implications and ensure its responsible use. Learn how enterprises can leverage GAI in our webinar, Welcoming the AI Summer: How Generative AI is Transforming Experiences.

Concerns persist as tech visionaries warn that AI might surpass human intelligence by the end of the decade. Humans are still far from fully grasping its potential ramifications and understanding how to collaborate with the technology and effectively mitigate its risks.

In July, OpenAI announced that it had tasked a dedicated team with creating technologies and frameworks to control AI that surpasses human intelligence. It also committed to dedicating 20% of its computing resources to this critical issue.

While initially exciting, the prospect of superintelligence also brings numerous challenges and risks. As we venture into this uncharted territory, understanding AI’s evolution and its potential implications for society becomes essential. Let’s explore this further.

Types of AI

AI can be broadly categorized into the following three types:

- Narrow AI

Narrow AI systems are designed to excel at specific tasks, such as language translation, playing chess, or driving autonomous vehicles. Operating within well-defined boundaries, they cannot transfer knowledge or skills to other domains. Common examples include virtual assistants like Siri and Alexa, recommendation algorithms on streaming platforms, and image recognition software.

- General AI

General AI would possess human-like cognitive abilities and be able to perform a wide range of intellectual tasks across many domains. Unlike narrow AI, general AI would be able to learn from experience in one area and apply that knowledge to different scenarios.

- Super AI (Superintelligence)

Super AI represents a hypothetical AI that surpasses human cognitive abilities in all domains. It holds the promise to solve complex global challenges, such as climate change and disease eradication.

Tech thinkers across the globe have raised an alarm

Amidst growing concerns about the risks of superintelligence, the departure of Geoffrey Hinton, known as “the Godfather of AI,” from Google was one of the most significant developments in the AI realm. Hinton is not alone in his concern about AI risks. More than 1,000 tech leaders and researchers have signed an open letter urging a pause in the development of the most advanced AI systems to give the world a chance to understand and adapt to the current developments.

These leaders emphasized that development should proceed only once we are confident that the outcomes will be beneficial and that the AI risks are understood and can be managed.

In the letter, they highlighted the following five key AI risks:

- Machines surpassing human intelligence: The prospect of machines becoming more intelligent than humans raises ethical questions and fears of losing control over these systems. Ensuring that superintelligence remains beneficial and aligned with human values becomes crucial

- Risks of “bad actors” exploiting AI chatbots: As AI technologies evolve, malicious actors can potentially exploit AI chatbots to disseminate misinformation, conduct social engineering attacks, or perpetrate scams

- Few-shot learning capabilities: Superintelligent AI might possess the ability to learn and adapt rapidly, presenting challenges for security and containment. Ensuring safe and controlled learning environments becomes essential

- Existential risk posed by AI systems: A significant concern is that superintelligent AI could have unintended consequences or make decisions that could jeopardize humanity’s existence

- Impact on job markets: AI’s rapid advancement, especially superintelligence, might disrupt job markets and lead to widespread unemployment in certain sectors, necessitating measures to address this societal shift

Since some of the risks associated with this technology have already materialized, its further advancement must be approached with caution.

Recommendations for moving forward

To mitigate AI risks, including the risk of superintelligence, while promoting AI development for positive societal outcomes, we recommend the following actions:

- Create dedicated teams to monitor the development – The government needs to appoint relevant stakeholders in regulatory positions to monitor and control these developments, particularly to protect the large population that does not understand the technology from its potential consequences

- Limit the current development – As the letter suggested, the government should implement an immediate moratorium on developing and using certain types of AI. This pause would give everyone enough time to better understand the technology and its associated risks. While Italy has used its legal framework to temporarily ban ChatGPT, efforts like this will not have a significant impact if individual countries carry them out in isolation

- Define policies – Regulatory agencies should start developing policies that guide researchers on how to develop the technology and that define the thresholds at which regulatory agencies and others must be alerted

- Promote public awareness and engagement – Promoting awareness about AI and superintelligence is crucial to facilitate informed debates and ensure the technology aligns with societal values

- Form international collaborations – Isolated initiatives won’t help the world. Larger collaboration among governments to define regulations and share knowledge is needed

While new technologies have always brought changes to the existing norms, disrupted established industries, and transformed societal dynamics, ensuring these advancements are beneficial to a larger audience is essential.

To discuss the risks of Generative AI, its use cases, and its implications across different industries, contact Niraj Agarwal, Priya Bhalla, and Vishal Gupta.