
The Rise of Machine Learning Operations: How MLOps Can Become the Backbone of AI-enabled Enterprises

We’ve seen enterprises develop and deploy many disparate AI use cases. But to become truly AI-enabled, an enterprise needs to develop, deploy, and maintain many standalone use cases that solve different challenges across the organization. Machine Learning Operations, or MLOps, promises a way to do this and to apply AI at scale with far less friction.

Everest Group is launching its MLOps Products PEAK® Matrix Assessment 2022 to gain a better understanding of the competitive service provider landscape. Discover how you can be part of the assessment.

With the rise in digitization, cloud, and internet of things (IoT) adoption, our world generates petabytes of data every day that enterprises want to mine to gain business insights, drive decisions, and transform operations.

Artificial Intelligence (AI) and Machine Learning (ML) insights can help enterprises gain a competitive edge but come with developmental and operational challenges. Machine Learning Operations (MLOps) can provide a solution. Let’s explore this more.

While tools for analyzing historical data to gain business insights have become well-adopted and easier to use, using this information to make predictions or judgment calls is a different ball game altogether.

Tools that deliver these capabilities, built on programming languages such as Python, SAS, and R, fall under the umbrella of data science or machine learning (ML). Popular examples include the deep learning frameworks TensorFlow and PyTorch and the Jupyter notebook environment.

Over the past decade, these tools have gained traction and have emerged as attractive options to develop predictive use cases by leveraging vast amounts of data to assist employees in making decisions and delivering consistent outcomes. As a result, enterprises can scale processes without proportionately increasing employee headcount.

Machine learning differs from traditional IT initiatives in that it does not take a one-size-fits-all approach. Earlier, data science implementation teams operated in silos, worked on different business processes, and used disparate development tools and deployment techniques with limited adherence to IT policies.

While the promised benefits were real, replicating them across geographies, functions, customer segments, and distribution channels, each with its own nuances, called for a customized approach in every category.

This led to a plethora of specialized models that individual business teams had to keep track of, along with significant infrastructure and deployment costs.

Advances in ML have since driven software providers to offer approaches to democratize model development, making it possible to now create custom ML models for different processes and contexts.

MLOps to the rescue

In today’s world, developing multiple models that serve different purposes is less challenging. Enterprises that want to become AI-enabled and deploy AI at scale need to equip individual business teams with model deployment and monitoring capabilities.

As a result, software vendors have started offering a DevOps-style approach to centralize and support the deployment requirements of a vast number of ML models, with individual teams focusing only on developing models best suited to their requirements.

This emerging methodology, called MLOps, is a structured approach to scaling ML across organizations that brings together the skills, techniques, and tools used in data engineering and machine learning.

What’s needed to make it work

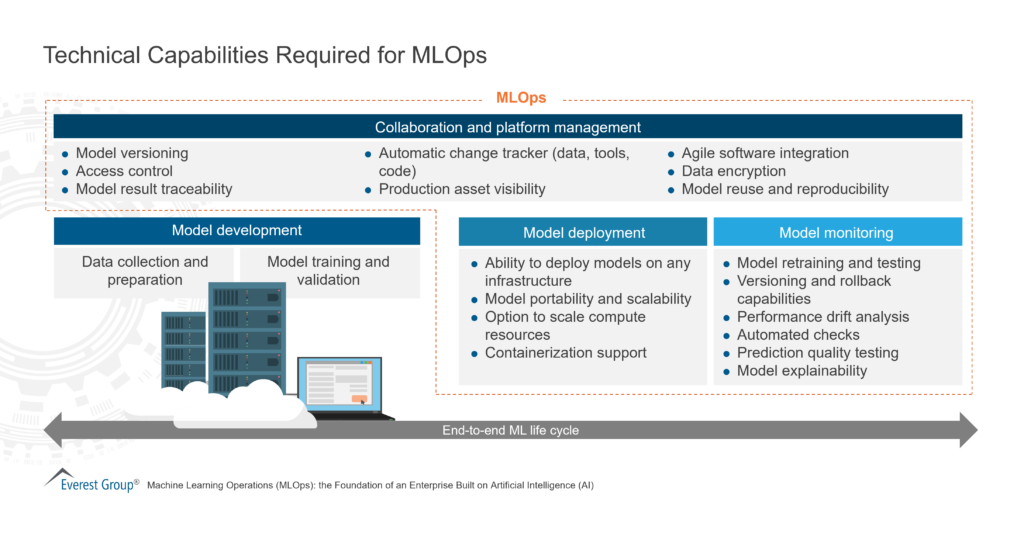

MLOps assists enterprises in decoupling the development and operational aspects in an ML model’s lifecycle by bringing DevOps-like capabilities into operationalizing ML models. Technology vendors are offering MLOps to enterprises in the form of licensable software with the following capabilities:

- Model deployment: At this stage, the ability to deploy models on any infrastructure is essential. Other features include storing an ML model in a containerized environment and options to scale compute resources

- Model monitoring: Tracking the performance of models in production is complex and requires a carefully designed set of performance metrics. As soon as models start showing signs of declining prediction accuracy, they are sent back to the development team for review or retraining

- Collaboration and platform management: MLOps solutions offer platform-related features such as security, access control, version control, and performance measurement to enhance reusability and collaboration among various stakeholders, including data engineers, data scientists, ML engineers, and central IT functions
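To make the monitoring capability more concrete, here is a minimal sketch of how a platform might flag a model whose prediction accuracy is declining. It is an illustration only, not how any particular vendor implements monitoring. The class name `AccuracyMonitor`, the rolling-window approach, and the `baseline_accuracy`, `window_size`, and `tolerance` parameters are all hypothetical choices made for this example.

```python
from collections import deque


class AccuracyMonitor:
    """Hypothetical monitor: tracks rolling accuracy of a deployed model."""

    def __init__(self, baseline_accuracy, window_size=100, tolerance=0.05):
        # Accuracy measured at deployment time (assumed to be known).
        self.baseline_accuracy = baseline_accuracy
        # How far accuracy may drop below the baseline before review.
        self.tolerance = tolerance
        # Keep only the most recent `window_size` outcomes.
        self.outcomes = deque(maxlen=window_size)

    def record(self, prediction, actual):
        """Store whether a production prediction matched the true label."""
        self.outcomes.append(prediction == actual)

    def rolling_accuracy(self):
        """Return accuracy over the current window, or None if it is empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """Flag the model once a full window falls below the allowed floor."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # Not enough evidence yet.
        return self.rolling_accuracy() < self.baseline_accuracy - self.tolerance


# Example: a model deployed at 90% accuracy, checked over a 4-prediction window.
monitor = AccuracyMonitor(baseline_accuracy=0.90, window_size=4, tolerance=0.05)
for predicted, actual in [(1, 1), (0, 1), (1, 0), (0, 0)]:
    monitor.record(predicted, actual)

print(monitor.rolling_accuracy())  # 0.5
print(monitor.needs_review())      # True
```

In practice, the true labels often arrive later than the predictions, and accuracy is only one of several metrics a team might track, so a production setup would likely be more involved than this sketch.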

Additionally, MLOps vendors offer support for multiple Integrated Development Environments (IDEs) to promote the democratization of the model development process.

While various vendors offer built-in ML development capabilities within their solutions, connectors are being developed and integrated to support a wide array of ML model file formats.

At the same time, the overall ML lifecycle management ecosystem is rapidly converging, with vendors developing end-to-end ML lifecycle capabilities, either in-house or through partner integrations.

MLOps can promote rapid innovation through robust machine learning lifecycle management, increase productivity, speed, and reliability, and reduce risk, making it a methodology worth paying attention to.

Everest Group is launching its MLOps Products PEAK® Matrix Assessment 2022 to gain a better understanding of the competitive landscape. Technology providers can now participate and receive a platform assessment.

To share your thoughts on this topic, contact [email protected] and [email protected].