
Decoding the EU AI Act: What it Means for Financial Services Firms

How will the EU AI Act affect the financial services sector, and how should enterprises and service providers structure their compliance activities? Read on to learn what this new legislation means for financial services firms looking to implement AI tools, or get in touch to understand the direct impact on your specific business.

In recent years, rapid advances in artificial intelligence, particularly generative AI, have transformed many sectors, including financial services. Technology giants such as Microsoft, Google, Amazon, and Meta have invested heavily in developing AI models and tools. However, this unprecedented growth has also raised concerns about the risks of unchecked AI use, prompting calls for regulation to ensure that these powerful technologies are developed and deployed responsibly.

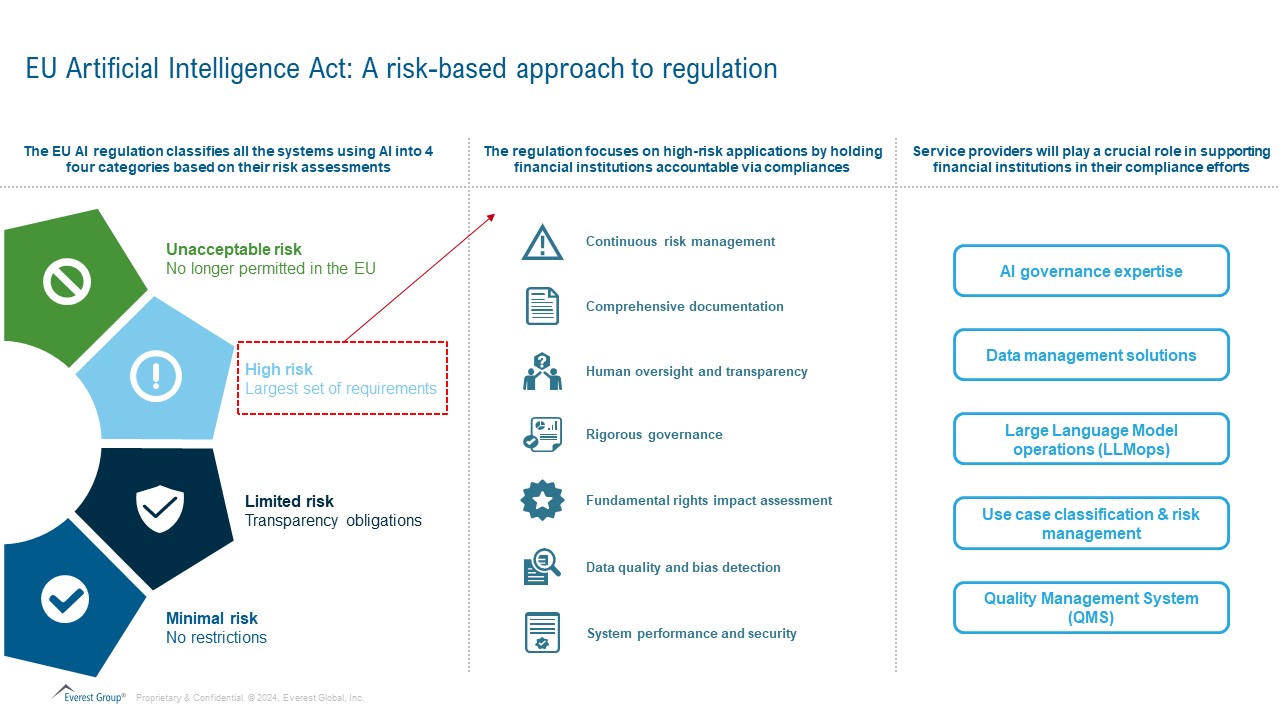

Recognizing the urgency of the situation, the European Union has taken a proactive step by introducing the AI Act, a pioneering piece of legislation that aims to establish a comprehensive framework for the development and use of trustworthy AI systems. The Act adopts a risk-based approach, categorizing AI systems into four distinct levels:

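- Unacceptable risk – Systems deemed a serious threat to people's safety or rights are banned. Examples include social scoring, predictive policing based solely on profiling, and real-time remote biometric identification in publicly accessible spaces for law enforcement (subject to narrow exceptions)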

- High-risk – Systems that could harm people's health, safety, or fundamental rights, such as AI-powered credit assessments, must comply with strict requirements covering risk management, training-data quality, transparency, cybersecurity, and testing. These systems must also be registered in a central EU database before they are placed on the market

- Limited risk – Systems posing limited risk, such as chatbots, need to comply with “limited transparency obligations,” such as disclosing to users that they are interacting with AI and labeling AI-generated content

- Low or minimal risk – No new obligations apply, but the Act encourages providers to adopt voluntary codes of conduct that apply some of the high-risk requirements

The AI Act and financial services

The financial services industry relies heavily on AI, from personalized banking experiences to fraud detection. For high-risk applications in particular, financial institutions need to prioritize the following:

- Continuous risk management – Focus on health, safety, and fundamental rights throughout the AI lifecycle, including regular updates, documentation, and stakeholder engagement

- Comprehensible documentation – Maintain clear, up-to-date technical documentation for high-risk systems, including characteristics, algorithms, data processes, risk management plans, and automatic event logging

- Human oversight and transparency – Maintain human oversight throughout the AI lifecycle and ensure clear and understandable explanations of AI decisions

- Rigorous governance – Implement robust governance practices to prevent discrimination and ensure compliance with data protection laws

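- Fundamental rights impact assessment – Conduct thorough assessments to identify and mitigate potential risks to fundamental rights. The Act specifically requires these of deployers of high-risk AI used for creditworthiness assessment and for life and health insurance pricing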

- Data quality and bias detection – Ensure training, validation, and testing datasets are relevant, representative, and as free of errors as possible, and examine them for biases that could lead to adverse impacts

- System performance and security – Ensure consistent performance, accuracy, robustness, and cybersecurity throughout the lifecycle of high-risk AI systems
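Of these requirements, automatic event logging is the most directly technical. As a minimal sketch, assuming a hypothetical credit-scoring model whose individual decisions must be traceable, each decision could be appended to a JSON-lines file. The `DecisionEvent` fields and the `log_decision` and `digest_features` helpers are illustrative assumptions; this sketch does not assume the Act prescribes any particular log format.

```python
import hashlib
import json
import tempfile
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional


@dataclass
class DecisionEvent:
    """One automatically logged decision from a hypothetical credit-scoring model."""
    model_id: str
    model_version: str
    input_digest: str                  # hash of applicant features, not the raw values
    score: float
    decision: str                      # e.g. "approve", "refer", "decline"
    reviewer_id: Optional[str] = None  # set when a human reviews the decision
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def digest_features(features: dict) -> str:
    """Hash the features deterministically (key order does not matter) so raw inputs stay out of the log."""
    canonical = json.dumps(features, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def log_decision(log_path: Path, event: DecisionEvent) -> None:
    """Append the event as one JSON line; this helper never rewrites earlier lines."""
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(asdict(event)) + "\n")


def read_events(log_path: Path) -> list[dict]:
    """Load all logged events, e.g. for an audit or incident review."""
    with log_path.open(encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "decisions.jsonl"
        applicant_a = {"income": 52000, "existing_debt": 8000}
        applicant_b = {"income": 31000, "existing_debt": 19000}
        log_decision(path, DecisionEvent(
            "credit-risk", "1.4.2", digest_features(applicant_a), 0.71, "approve"))
        # A borderline case referred to a human reviewer.
        log_decision(path, DecisionEvent(
            "credit-risk", "1.4.2", digest_features(applicant_b), 0.44, "refer",
            reviewer_id="analyst-17"))
        for event in read_events(path):
            print(event["timestamp"], event["decision"], event["reviewer_id"])
```

Recording the model version and reviewer alongside each decision supports both the documentation and human-oversight points above. Tamper-evident storage, such as write-once buckets, is outside the scope of this sketch.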

To align with the EU AI Act, enterprises must take a structured approach. First, they should develop a comprehensive compliance framework to manage AI risks, ensure adherence to the Act, and implement risk mitigation strategies. Next, they need to take inventory of existing AI assets like models, tools, and systems, classifying each into the four risk categories outlined by the Act. Crucially, a cross-functional team should be formed to oversee AI risk management, drive compliance efforts, and execute mitigation plans across the organization. By taking these steps, enterprises can future-proof their AI initiatives while upholding the standards set forth by the landmark regulation.
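The inventory-and-classification step can be sketched as a small asset register. This is a minimal sketch, assuming each asset has already been tagged with a use-case label. The `RiskTier`, `AIAsset`, and `USE_CASE_TIERS` names and the mapping itself are hypothetical illustrations, not a legal classification. Any use case the mapping does not recognize goes to a `needs_review` bucket for the cross-functional team rather than being assigned a tier by default.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical, illustrative mapping: real classification requires legal review
# of each system against the Act's prohibited practices and high-risk list.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "credit_scoring": RiskTier.HIGH,
    "life_health_insurance_pricing": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


@dataclass
class AIAsset:
    name: str
    kind: str       # "model", "tool", or "system"
    use_case: str   # label assigned during the inventory
    owner: str      # accountable business unit


def classify(asset: AIAsset) -> Optional[RiskTier]:
    """Return the mapped tier, or None when the use case needs manual review."""
    return USE_CASE_TIERS.get(asset.use_case)


def build_register(assets: list[AIAsset]) -> dict[str, list[str]]:
    """Group asset names by tier; unrecognized use cases land in 'needs_review'."""
    register: dict[str, list[str]] = defaultdict(list)
    for asset in assets:
        tier = classify(asset)
        key = tier.value if tier is not None else "needs_review"
        register[key].append(asset.name)
    return dict(register)


if __name__ == "__main__":
    inventory = [
        AIAsset("retail-credit-model", "model", "credit_scoring", "Risk"),
        AIAsset("help-desk-bot", "system", "customer_chatbot", "Operations"),
        AIAsset("email-filter", "tool", "spam_filter", "IT"),
        AIAsset("churn-predictor", "model", "churn_prediction", "Marketing"),
    ]
    for tier, names in build_register(inventory).items():
        print(tier, names)
```

Routing unknown use cases to review instead of defaulting them to minimal risk keeps the register from understating the organization's exposure.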

Opportunities for service providers

- AI governance expertise – Service providers can offer expertise in building and implementing AI governance frameworks that comply with the EU AI Act. This includes developing policies, procedures, and tools for responsible AI development and deployment

- Data management solutions – Service providers can assist financial institutions in managing their data effectively for AI purposes. This includes data cleaning, labeling, and ensuring data quality and compliance

- Large Language Model operations (LLMOps) – As financial institutions explore the use of Large Language Models (LLMs), service providers can offer expertise in LLMOps, which encompasses the processes for deploying, managing, and monitoring LLMs

- Use case classification & risk management – Service providers can help financial institutions classify their AI use cases according to the EU AI Act’s risk framework, and develop appropriate risk management strategies

- Quality Management System (QMS) – Service providers can help implement a robust QMS to ensure that AI systems consistently meet the Act’s requirements and other emerging regulatory standards

The road ahead

As the AI Act progresses through the legislative process, financial institutions and service providers must proactively prepare for the upcoming changes. This includes conducting AI asset inventories, classifying AI systems based on risk levels, assigning responsibility for compliance, and establishing robust frameworks for AI risk management. Service providers will play a crucial role in supporting financial institutions in their compliance efforts.

To learn more about the EU AI Act and how to achieve compliance with the regulations, contact Ronak Doshi, Kriti Seth, and Laqshay Gupta. Understand how we can assist in managing AI implementation and compliance, or download our report on revolutionizing BFSI workflows using Gen AI.