Blog

Data stack has entered its faultline shift: IBM’s $11B message to the market

Reach out to discuss this topic in depth.

IBM’s Next Chapter: Unifying Data, Cloud, and AI

On December 8, 2025, IBM announced its US$11B all-cash acquisition of Confluent, expected to close in mid-2026, with Confluent retained as a distinct brand. The deal strengthens IBM’s data, Artificial Intelligence (AI), automation, and consulting portfolio and is expected to generate US$500M in run-rate synergies through scale and productivity gains.

Beyond portfolio expansion, the acquisition reflects how enterprises are reshaping their data foundations for AI. Real-time data has become essential for agentic systems, which can make flawed decisions when operating on stale or batch inputs, especially in high-throughput environments like banking, payments, and logistics. Trustworthy, streaming data is now a credibility threshold for enterprise AI, and this move positions IBM to anchor that capability more deeply across its stack.

IBM’s Mergers & Acquisition (M&A) trail reads like a map of what the modern data stack for AI success should be. Red Hat gave IBM a consistent execution layer across environments, HashiCorp added the ability to deploy and manage those environments across clouds and DataStax brought a cloud-native database built for AI-era workloads. Confluent is the next logical piece: the data-in-motion fabric that keeps this stack synced and current.

The strategic synergy is clear: Confluent’s footprint across more than 40% of the Fortune 500 strengthens IBM’s position in large U.S. enterprises, while IBM’s global reach and consulting muscle give Confluent access to markets where its presence has been limited. IBM also has the scale to accelerate Confluent’s current investments, particularly around governance, streaming-native workloads, and enterprise-grade tooling. What remains to be seen is how this momentum aligns with Confluent’s existing identity. Confluent has grown through a clear, streaming-focused roadmap and strong ties to the Kafka community – traits that set it apart from similar hyperscaler offerings. IBM’s past integrations span both successes and softer landings, so the question is whether Confluent can maintain its rhythm and influence in the market while becoming part of IBM’s broader portfolio.

What this acquisition changes in the market

For enterprises:

Confluent customers will look for continuity, particularly around pricing, neutrality, and cloud-agnostic deployment. IBM clients may see this as a way to pull real-time data closer to the center of their architecture. The impact will be more immediate for enterprises that use both, with the transition period becoming the main variable as tools are aligned, integration patterns adjust, and the direction of the combined offering becomes clearer.

For supply-side providers:

The acquisition brings one of the leading independent streaming specialists into a larger platform portfolio, prompting other providers to rethink their investments in this space. Some may accelerate their own streaming roadmaps; others may look to acquire emerging players to fill gaps. For service providers (SPs), the deal opens space for deeper work around IBM and Confluent but also raises expectations: clients will expect stronger real-time data skills in every engagement. SPs will also have to step up in clarifying enterprise doubts around what changes, while also recalibrating their own partnership strategies with IBM and Confluent.

For the data and AI landscape:

Confluent has shaped Kafka’s direction for years, so its move into IBM naturally raises questions about roadmap cadence, long-term alignment, and how its ecosystem relationships evolve over time. Existing partnerships add another layer of complexity: Confluent’s close ties with hyperscalers and data platforms have been central to its growth, and it remains to be seen how those relationships are shaped as IBM brings Confluent into its broader data and AI agenda. As Confluent’s direction under IBM becomes clearer, we expect more movements in the streaming space in the coming months.

One thing is clear: AI success cannot wait, and neither can your data stack

Microsoft’s US$25B data-center push, Google’s US$15B AI hub investment, and similar AWS commitments underscore hyperscalers’ accelerating focus on AI data readiness. The Global AI Infrastructure Investment Partnership (Microsoft, BlackRock/GIP, MGX, NVIDIA, and xAI) targets up to US$100B to build AI-ready data centers and power the infrastructure. All these moves point to a truth the industry cannot dodge anymore: AI runs on data, and without a rock-solid data foundation, the whole AI dream collapses under its own hype. AI’s rapid trajectory leaves little room to dawdle and will push enterprises to focus on data-stack investments or risk missing the early waves of adoption.

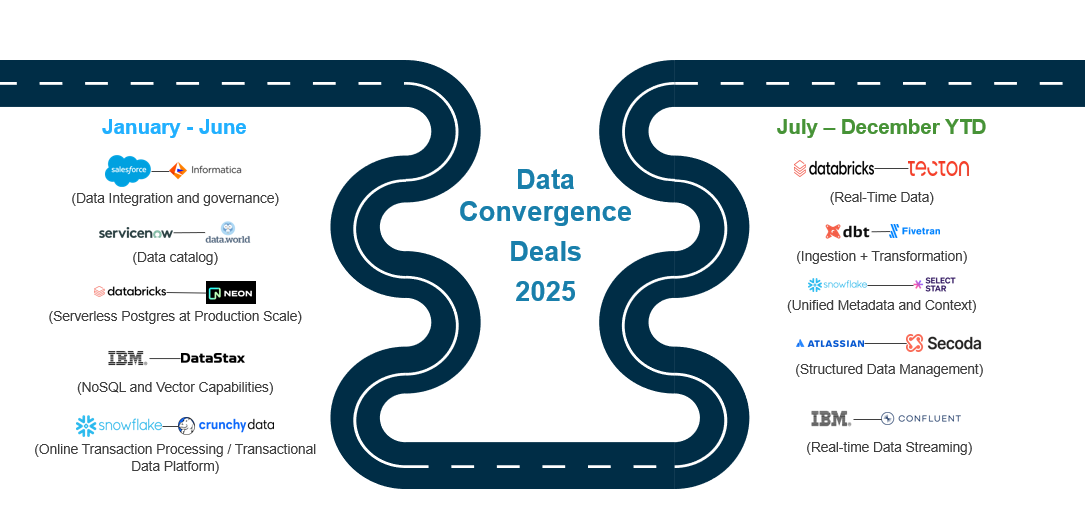

We are seeing accelerated convergence across the data stack, reflected in a series of strategic deals.

We have spent much of this year in conversation with stakeholders who see the same thing we do: the data stack is converging, fast. Everest Group has been discussing this shift for a while, spotlighting it in pieces such as “Salesforce Acquires Informatica: The Missing Link in Its AI Ambitions?” (Link), “Convergence of the Data Stack: What the dbt–Fivetran Merger Signals” (Link), and “Snowflake’s select star move: When data finally meets its context” (Link). We expect this momentum to accelerate as technology providers race to anchor themselves at the center of enterprise data workflows, and we will continue tracking every move.

Takeaway

AI, Generative AI (gen AI) and agentic systems have created a storm across the landscape, and as the ecosystem gains experience, it becomes clearer that everything rests on the data foundation beneath, fueling a wave of consolidation and convergence as providers rewrite their stacks. As AI pushes the boundaries of what data must do, acquisitions like this reflect how quickly the market is reshaping itself around that pressure. The edge now sits with those who can move data quickly and trust it end-to-end; everything else follows from there. The ecosystem players will have to decide whether they intend to own the data-AI spine by handling the data burden that AI creates or simply orbit those who do and risk being pushed to the edges of the stack.

Everest Group continues to track the data stack convergence era and how it influences the broader data and AI landscape.

If you found this blog interesting, check out, How IBM rewired itself – focusing on the IBM trends – Everest Group Research Portal, which delves deeper into another topic relating to IBM.

We would welcome your opinions on these movements and for any queries, please reach out to Mansi Gupta ([email protected]), Anju Kattakathu ([email protected]), and Snigdha Uppala ([email protected]).