Blog

Red teaming in AI: A trust and safety imperative

What happens when Artificial Intelligence (AI) models aren’t just wrong but unsafe?

As AI systems become embedded in digital services, platforms, and user-facing tools, ensuring they behave safely and predictably is no longer optional.

In this context, red teaming in AI has emerged as a key discipline within Trust and Safety (T&S). Inspired by adversarial testing practices from cybersecurity including blue, and purple teaming; red teaming in AI simulates real-world misuse, edge cases, and adversarial behavior. Red teaming in AI goes beyond functional testing to ask: What could go wrong, and how can we fix it before it happens?

Reach out to discuss this topic in depth.

How red teaming helps catch AI failures early

Red teaming in T&S focuses on how systems might fail in high-stakes, real-world scenarios. It explores not just technical flaws, but the ways people might intentionally or unintentionally exploit the system. Red teaming typically focuses on five key areas:

- Adversarial testing challenges the model with edge cases, offensive prompts, and risky scenarios to surface harmful outputs such as hate speech, self-harm, or misinformation.

- Ethical and bias probing evaluates fairness across identities, regions, and languages to detect harmful stereotyping, underrepresentation, or discrimination.

- Prompt engineering attacks simulate indirect manipulation through roleplay, ambiguity, or chained prompts techniques often used to bypass filters.

- Data poisoning simulations to introduce manipulated or unsafe data into fine-tuning or Retrieval-Augmented Generation (RAG) systems to see if outputs are skewed.

- Model extraction to test how much internal knowledge or sensitive data a model might leak through repeated or strategic querying.

Pillars of red teaming

Red teaming methods vary widely depending on the risks being assessed:

- Manual red teaming allows experts to simulate real abuse, including culturally sensitive or policy edge cases that require human nuance. For example, a red teamer may pose as a user testing moderation limit by using coded hate speech or regional slang to trigger borderline responses cases where automated filters often fall short

- Automated red teaming runs large volumes of scripted or AI-generated prompts to identify consistent vulnerabilities or policy violations at scale. While fast and repeatable, it often struggles to detect creative misuse, sarcasm, or subtle manipulation embedded in longer conversations

- Hybrid models combine the two, using automation for breadth and human insight for depth. This layered approach ensures wide testing coverage while still catching hard-to-spot edge cases

T&S teams also apply Reinforcement Learning from Human Feedback (RLHF) and Reinforcement Learning from AI Feedback (RLAIF) as part of their red teaming workflows. While manual and automated red teaming are essential for uncovering vulnerabilities such as harmful outputs, policy violations, or bias, RLHF and RLAIF are increasingly used to scale and systematize the response. RLHF enables red team findings to directly influence model behavior during training, using real human judgments to guide the model toward safer, policy-aligned responses. RLAIF complements this by using classifiers and automated signals to flag unsafe outputs during or after testing, allowing teams to monitor red teaming effectiveness continuously.

Together, these methods form a full-spectrum safety workflow one that doesn’t just test for failure but builds in better alignment before deployment.

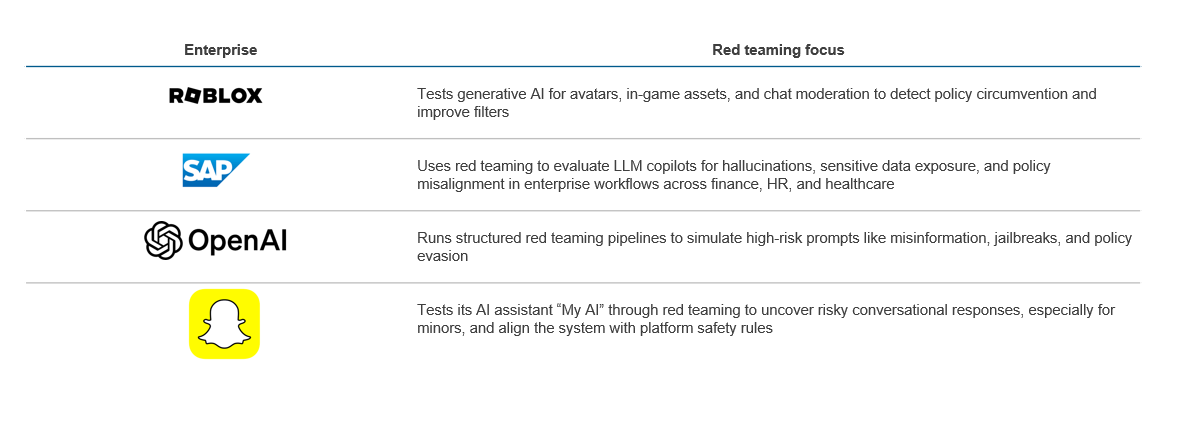

Many leading organizations are already using red teaming not just as a one-off risk assessment, but as a core component of their T&S strategy. Whether testing for policy violations, misuse scenarios, or emerging forms of AI abuse, red teaming is becoming deeply integrated into how enterprises design, test, and monitor AI systems. The table below highlights how different companies are applying red teaming to support safer and more trustworthy AI deployments.

These use cases show how red teaming is now a practical, operational tool not just for identifying technical vulnerabilities, but for maintaining platform integrity, enforcing policies, and protecting users at scale. What stands out across these examples is how red teaming is being woven into broader governance and safety frameworks. It is not just a testing checkpoint, but a continuous feedback loop that connects product design, content moderation, and compliance operations.

These use cases show how red teaming is now a practical, operational tool not just for identifying technical vulnerabilities, but for maintaining platform integrity, enforcing policies, and protecting users at scale. What stands out across these examples is how red teaming is being woven into broader governance and safety frameworks. It is not just a testing checkpoint, but a continuous feedback loop that connects product design, content moderation, and compliance operations.

Why red teaming must evolve: from breaking models to stress-testing systems

Traditional red teaming built around static prompt testing struggles to keep up with today’s rapidly evolving AI systems. Models are becoming more agentic, using tools, accessing data, and making decisions with minimal oversight. They’re also multimodal, processing not just text but images, audio, and other input types.

As AI takes on more complex roles, red teaming must evolve from point-in-time testing to continuous, system-level evaluation. It’s not just about what the model says anymore. It’s about what it does, how it behaves under pressure, and whether it can be misled or manipulated in dynamic environments.

For example, an agentic assistant that automatically reads user calendar invites and books travel. A red team might embed a malicious location string or conflicting metadata in an event testing whether the AI system interprets it safely or makes an unintended, costly booking. These are the types of edge cases that traditional testing would likely miss.

Key priorities now include:

- Agentic AI testing – Stress-testing how models use Application Programming Interfaces (APIs), plugins, and tools to complete tasks or take real-world actions

- Multimodal abuse simulation – Evaluating how combinations of text, images, or code might produce unsafe outputs that evade traditional filters

- Contextual resilience – Testing behavior across user types, time zones, languages, and regulatory conditions to ensure policy alignment under real-world pressure

The consequences of outdated red teaming are serious. AI systems that aren’t tested for tool misuse, autonomy failures, or learning drift may unintentionally escalate risk or even trigger harm in production. Evolving red teaming means embedding it not just in testing, but in governance, design, deployment, and continuous learning.

Final thoughts

Red teaming is no longer just a checkpoint before deployment; it’s the connective tissue between AI development and T&S outcomes. It brings product, policy, engineering, and risk teams together around a shared question: what could go wrong, and how can we catch it before it happens?

As AI capabilities expand, so do the risks. Without targeted, proactive testing, even well-designed systems can be manipulated, misused, or drift from policy expectations. Red teaming helps teams move beyond reactive safety practices toward continuous, real-world validation.

For enterprises deploying advanced AI, investing in red teaming means building a culture of proactive safety. It’s not about slowing progress, it’s about scaling safely: intelligently, intentionally, and with user trust at the center.

If you enjoyed this blog, check out, Trust and safety’s double squeeze: DSA oversight intensifies as U.S. tightens visa restrictions – Everest Group Research Portal, which delves deeper into another topic relating to T&S.

If you have any questions, are interested in exploring red teaming practices, their applications in T&S, governance frameworks for AI risk management, or broader strategies for evaluating and mitigating system vulnerabilities, feel free to contact Abhijnan Dasgupta ([email protected]), Dhruv Khosla ([email protected]), or Ish Sahni ([email protected]).