The Dark Side of the Moon: Generative AI Spurring Rising Trust and Safety Interventions

The rise of generative Artificial Intelligence (AI) has been nothing short of a technological revolution. From art to advertising, it has transformed content creation and consumption. As we dive deeper into generative AI, we can’t ignore the elephant in the room: How will it impact enterprise Trust and Safety (T&S) policies and operations? Read this latest blog in our series to learn about the impact of generative AI on T&S, potential challenges for enterprises, and recommendations to navigate T&S waters.

“In the age of generative AI, trust brokers will become increasingly necessary and increasingly valuable in the marketplace.” – Hendrith Vanlon Smith Jr., CEO of Mayflower-Plymouth

Generative AI is making headlines as organizations discover exciting breakthroughs by investing in developing their own models. These advanced models have demonstrated remarkable abilities to generate realistic and diverse outputs that can be useful in applications such as content creation, language translation, image and video synthesis, and even drug discovery. However, they also raise valid ethical concerns around deepfakes, truthfulness (or the lack thereof), biased output, plagiarism, and malware, among others. Let’s explore this further.

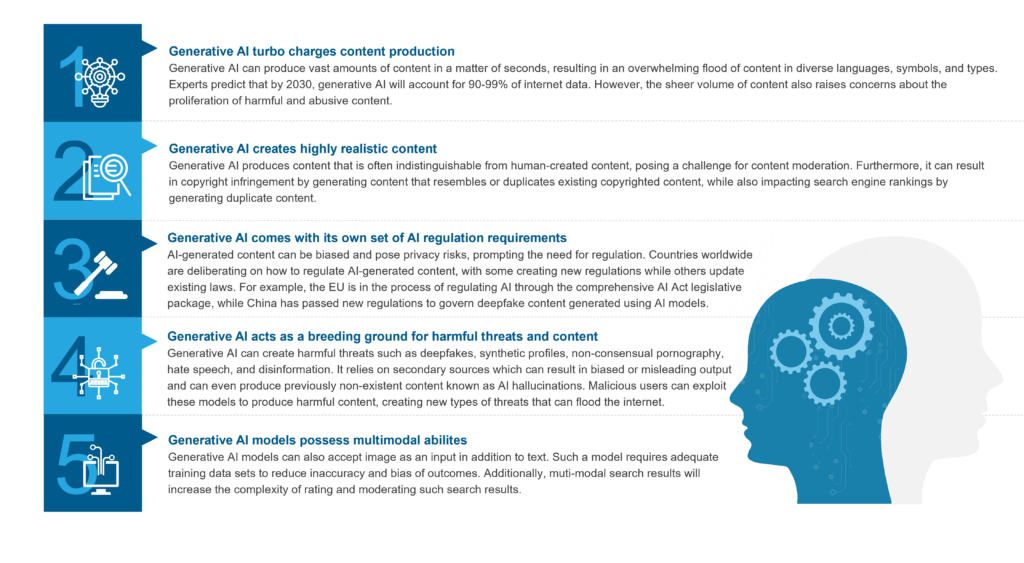

Generative AI is the future of content but introduces additional complexities for enterprises

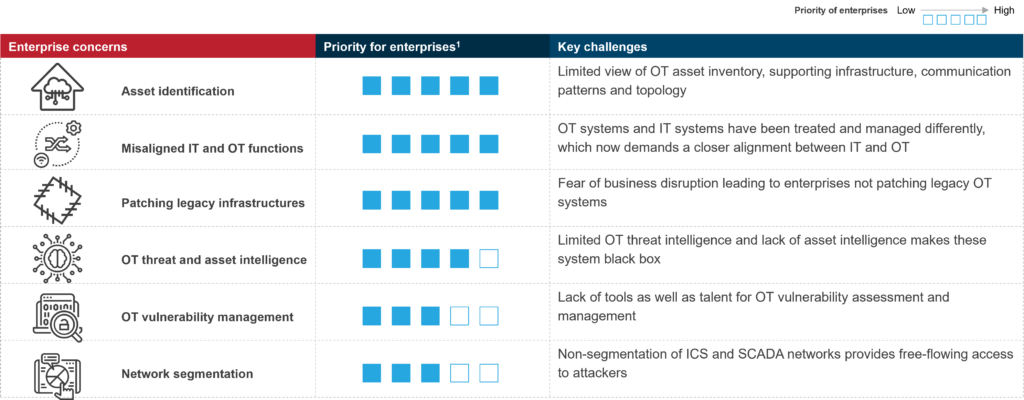

Generative AI is a type of artificial intelligence that can create new and diverse forms of data, including images, audio, text, and video. This technology has been around for some time, but recent advancements in models such as ChatGPT, DALL-E 2, Midjourney, and others have brought generative AI into the spotlight. While generative AI has led to an explosion of potential uses across diverse fields, it also makes effective content moderation more complex for enterprises, as illustrated below.

As generative AI evolves constantly, with daily updates and new models adding to its complexity, heightened protection and care are necessary to avoid lasting negative consequences, such as reduced brand value and loss of customer trust. To ensure customer protection and platform safety, enterprises require dedicated T&S teams more than ever.

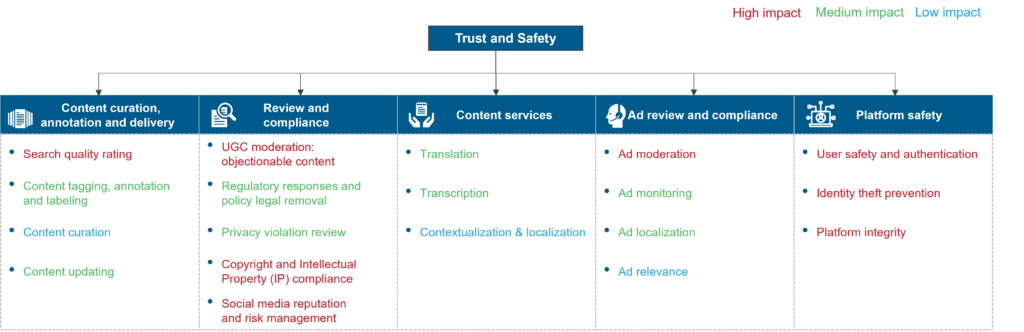

Generative AI impacts the entire T&S value chain to varying degrees

Below are some examples of emerging T&S use cases:

- Getty Images, a stock photo and visual media company, sued Stability AI in the UK and US for allegedly copying over 12 million photos without permission, claiming copyright infringement, trademark violation, and unfair competition

- Twitch, a streaming platform, temporarily banned the AI-generated sitcom “Nothing, Forever,” a parody of the popular TV show “Seinfeld,” due to the use of transphobic language in an episode

T&S needs to keep pace with generative AI

Current T&S operations are lagging behind the rapid evolution of content formats and need to step up their game to deal with the nuances of generative AI.

T&S services:

Effectively moderating generative AI content requires human moderators to possess a blend of technical expertise, ethical consciousness, and critical thinking abilities. A lack of these attributes can result in content being incorrectly flagged or removed, as evidenced by the artist who was banned from a well-known art community by a moderator over accusations of using AI to create their artwork. Moderation of AI-generated content can also be limited by a lack of support for niche languages.

Moreover, training AI models requires moderators to be exposed to more dangerous and harmful content over longer periods, which could take a toll on their mental health and productivity. For example, a service provider hired by a technology company to train its GPT model terminated the contract because of its workers’ prolonged exposure to harmful content.

T&S solutions:

Content moderation technology solutions still need to mature, both technically and ethically, to effectively handle complex content such as generative AI output. The technology also needs to become more robust to moderate all types of generative AI content, including audio, video, and livestream. A shortage of training data, which contributes to limited explainability and contextual understanding, also poses a significant hurdle for content moderation technology that relies on large datasets.

Efforts to create effective AI-generated content detectors are underway, but their accuracy remains a challenge, with current capabilities falling short of achieving 95% reliability. In the race between generative AI creating harmful content and T&S AI moderating it, generative AI seems to be leading its T&S counterpart.

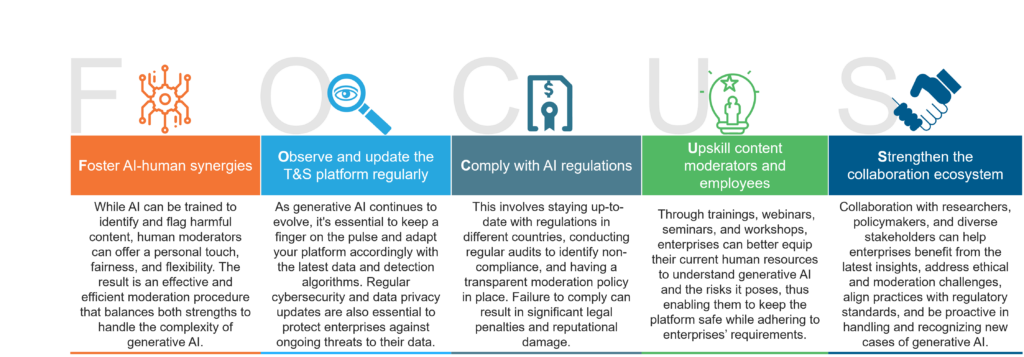

Enterprises need to amplify their FOCUS on T&S to deal with the nuances of generative AI

In this rapidly changing AI world, implementing the FOCUS framework illustrated below can enhance enterprises’ capabilities in handling generative AI complexities to safeguard users.

Strategic providers can help enterprises maintain the FOCUS framework in the following ways:

- Upgrade algorithms and training datasets: Partnerships can help maintain the robustness of enterprise T&S platforms by improving algorithm accuracy and by training the T&S technology on a larger and more varied set of datasets

- Approach innovation collaboratively: Enterprises should look outward for innovation in addition to in-house investments. The right partner can help explore new technologies, such as explainable AI and responsible and ethical AI, to detect patterns and anomalies in generative AI content and assist enterprises in keeping their platforms secure

- Provide policy management support: Enterprises also require constant market monitoring, in addition to collaboration with regulatory bodies and policymakers, to identify triggers that could affect existing T&S policies

- Customize moderation rules: Operationally, generative AI requires customized moderation rules that are specifically geared to identify and highlight AI output that violates enterprise guidelines or other policies

- Deliver a holistic, platform-based T&S solution: Service providers are increasingly partnering with technology providers that offer comprehensive, scalable platforms integrating various content handling technologies and human capabilities into a unified system

Looking forward, T&S for generative AI puts forth many questions:

As generative AI evolves, the following questions need to be addressed before charting the right investment strategy for T&S:

- Can generative AI be used to build a T&S solution that replaces current AI models?

- Will data privacy regulations and the reluctance of peers to share proprietary data limit companies’ ability to collect and use data for generative AI training?

- Will content generated by AI be used to train even more AI models?

As we continue to make progress in the AI field, the possibilities for generative AI are endless, and a future where AI-generated content becomes the norm is conceivable. However, it’s essential to approach this development cautiously and diligently, taking into account all the potential implications. As the future of generative AI unfolds, its success will depend on our ability to balance its power with our responsibility to use and moderate it wisely.

For our other recent blogs on how ChatGPT will impact various industry sectors, see Impact of ChatGPT and Similar Generative AI Solutions on the Talent Market, Can BFSI Benefit from an Intelligent Conversation Friend in the Long Term, and ChatGPT Trends – A Bot’s Perspective on How the Promising Technology will Impact BPS.

To discuss how generative AI will impact the T&S process in detail, please reach out to Abhijnan Dasgupta and Shubhali Jain.

Discover more about generative AI in our LinkedIn Live, Generative AI and ChatGPT: Separating Fact from Fiction.