Impact of ChatGPT and Similar Generative AI Solutions on the Talent Market

ChatGPT’s arrival has brought much hype and speculation that it could replace several human workforce areas. While ChatGPT shows great early potential, how will it impact the “future of work” and the overall talent landscape? Read the latest blog in our series to learn more about the impact of ChatGPT and other generative Artificial Intelligence (AI) solutions on the workforce.

Since its launch, ChatGPT has taken the internet by storm, reaching a million users in under a week. No wonder it is the most talked-about subject in technology and innovation. While ChatGPT has generated a lot of curiosity among netizens, the big tech companies are not far from the spotlight either.

Microsoft has already invested billions in the technology and even integrated it into its search engine Bing. Google has officially announced “Bard,” its ChatGPT rival based on an in-house language model that is undergoing testing before being released to the public.

Chinese search engine Baidu has announced the testing of a similar tool, “Ernie Bot,” while Alibaba has also confirmed it is working on an AI tool. Worldwide, we are witnessing rapid innovation and updates in this field, and by the time you read this blog, there may well be further developments.

What does it mean for the talent and workforce industry?

While the utility of a generative AI like ChatGPT remains an area to explore, we expect HR and business leaders to leverage ChatGPT across various dimensions of work and talent management. The workforce industry has evolved over the past few decades, and with the advent of machine learning and AI, we can expect to see some major transformations in the coming few years.

While ChatGPT has the potential to impact talent management, it is still not a replacement for human recruiters. Instead, it can assist them by streamlining the process and making it cost-effective and efficient by automating routine tasks, improving the candidate experience, and enhancing the recruitment process.

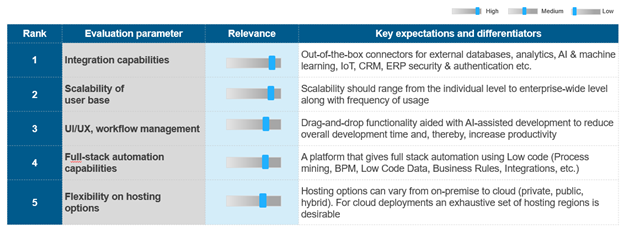

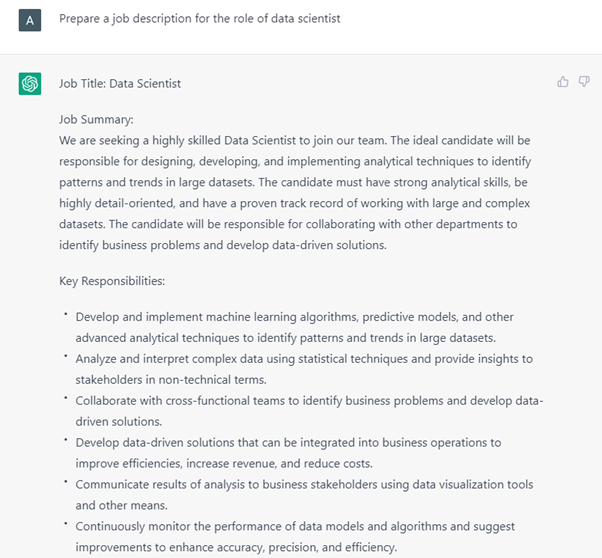

Some functions, such as job screening, content development, and job pricing, will see a greater impact than others, as illustrated below:

Current mapping of ChatGPT and similar AI across the talent management value chain

Where can ChatGPT replace human involvement in the near and long term?

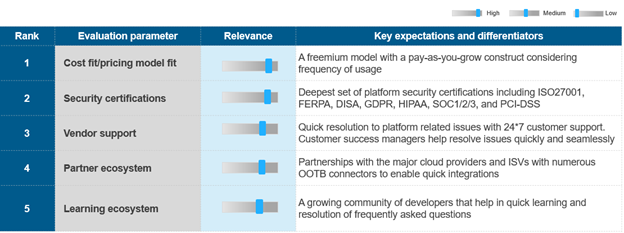

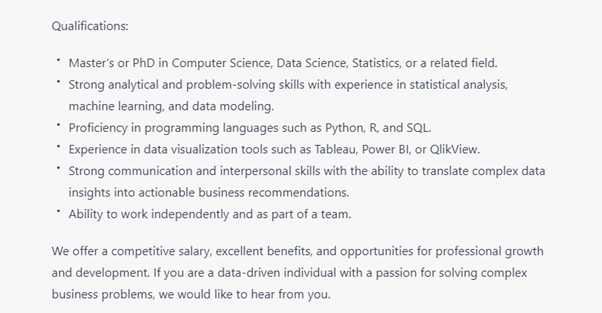

ChatGPT has already proven its capability to solve math problems, write code and content, create poetry and literature, converse with other AI tools, and assist with business problems. In the near future, generative AI tools could take over many tasks that are not yet automated, such as targeting prospects, writing sales pitches, drafting reports, writing basic code, developing financial models, analyzing data, assessing candidates, and optimizing operations. The list has no definite bounds, and a single generative AI tool could replace jobs across multiple domains, including marketing, sales, finance, and operations.

The potential impact of ChatGPT and similar AI across workforce areas

Key examples of generative AI adoption

Here are some of the applications for these tools in the industry:

- Content creators at leading cloud services company VMware use the AI-based content creation tool Jasper to generate original content for marketing – from email to product campaigns on social media

- Morgan Stanley is working with OpenAI’s ChatGPT to fine-tune its training content on wealth management. Financial advisors are using it to search for existing content within the firm and design tailored content for their clients

- Codeword, a leading tech marketing agency, has already hired the world’s first AI interns as an experiment to assist them with content writing, design, animation, and marketing

On similar themes, we have seen companies leveraging AI, such as Tesla building driverless cars and McDonald’s experimenting with employee-less eateries. In a few years, AI bots could replace various roles, such as customer service executives, recruiters, content writers, and even coders.

We might expect to see a single generative AI tool functioning across multiple domains (finance, HR, marketing, customer service, operations, etc.) within an organization, reducing the need for human intervention.

Blue-collar jobs were already at risk, and the success of ChatGPT now threatens several white-collar professions as well. In the long run, ChatGPT and similar AI tools can open doors to many new opportunities for AI integration, and any prediction we make today risks falling short of reality.

What challenges are associated with ChatGPT adoption?

We have already discussed the technical challenges of ChatGPT in our earlier blogs (see links at the end of this post). Human interaction and empathetic judgment are the two major challenges for any AI tool seeking to penetrate the talent management space. In addition, limited capabilities in languages other than English and a text-driven communication style restrict the use cases of generative AI in non-English-speaking regions. Ethical and legal concerns also need to be addressed as the distinction between AI-generated and human-generated data blurs.

Moreover, most short-term use cases of generative AI, such as chatbots, already have alternatives available in the market. It will take time for ChatGPT to integrate further into the talent market and move from experimental use to organization-wide implementation. Integrating a new system also requires additional investment and training, which organizations will need to plan for.

Impact of ChatGPT on the future workforce

Amid all the hype and speculation, one thing is for sure: AI is here to stay. As humans, we need to embrace it and learn to co-exist with it. As AI adoption rises, talent dynamics are also expected to change, and demand for certain associated skills and roles will soar as we enter the age of AI.

Going forward, we can expect to see higher demand for relevant technical skills. This also creates opportunities for people with related skills, such as those with specific domain knowledge who can train models and personnel, review content, ensure data reliability, and integrate systems based on industry needs.

Follow our next blog in the series to learn more about the type of skills/roles that will be affected and the new roles that will emerge in demand.

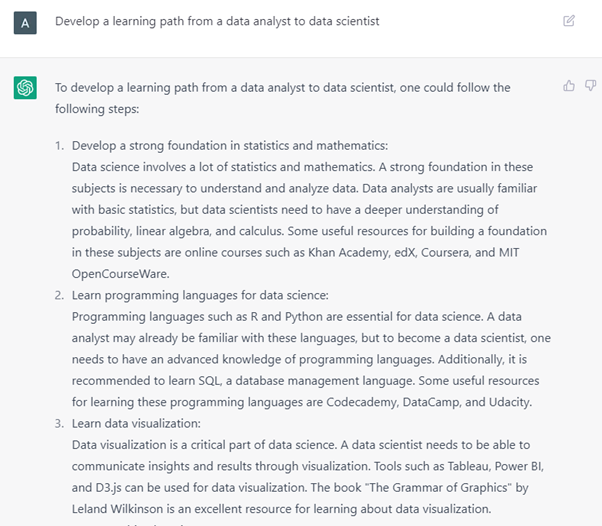

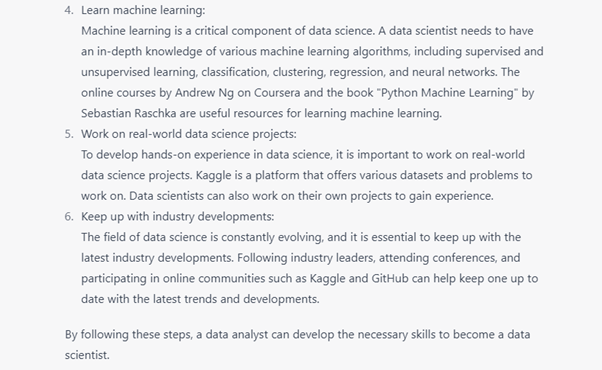

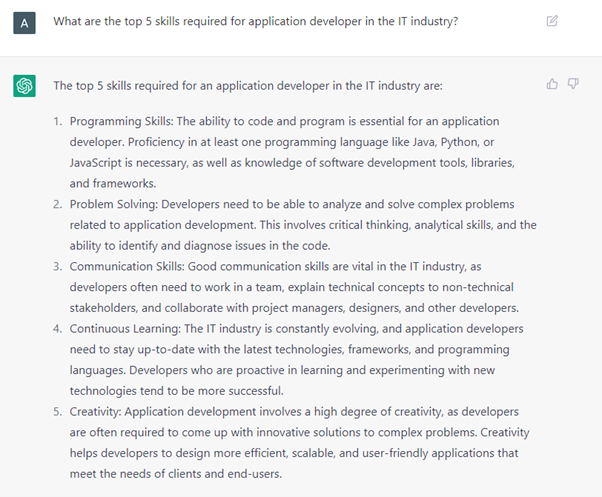

Below are some illustrations of the current capabilities and limitations of ChatGPT on talent-related queries. (The screenshots were taken on February 20, 2023, from India and the responses might be different for other users.)

For our previous blogs on this topic, see “ChatGPT – Can BFSI Benefit from an Intelligent Conversation Friend in the Long Term?”, “ChatGPT Trends – A Bot’s Perspective on How the Promising Technology will Impact BPS,” and “ChatGPT – A New Dawn in the Application Development Process?”

If you have questions about the latest trends in the talent landscape or would like to discuss developments in this space, reach out to [email protected] or [email protected].

You can also watch our webinar, Top Emerging Technology Trends: What Sourcing Needs to Know in 2023, to learn more about how organizations can implement new technologies into processes and operations.