Reimagining Global Services: How to get MORE out of Technology | Sherpas in Blue Shirts

Much has been written and said about the Bimodal IT model Gartner introduced in 2014 – with forceful arguments for and against. Without intending to bash that model, I think it’s safe to say that the digital explosion over the last three years demands that enterprises’ technology strategies be much more nuanced and dynamic.

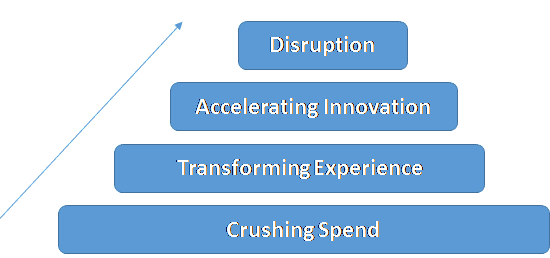

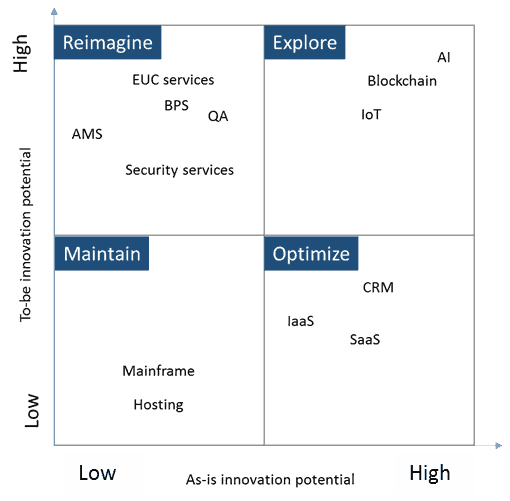

The MORE model for global services

Let me explain with the help of the following chart. I call it the Maintain-Optimize-Reimagine-Explore – the MORE – model.

I’ve tried to plot (intuitively) a bunch of technology and service themes on their current and future innovation potential.

- Maintain: On the bottom right are themes like mainframes and traditional hosting services that are unlikely to go through dramatic changes in the near term. These are exceptionally stable and commoditized, and will not attract exciting investments. Enterprises still need them, and CIOs should Maintain status quo because it’s too risky and/or expensive to modernize them.

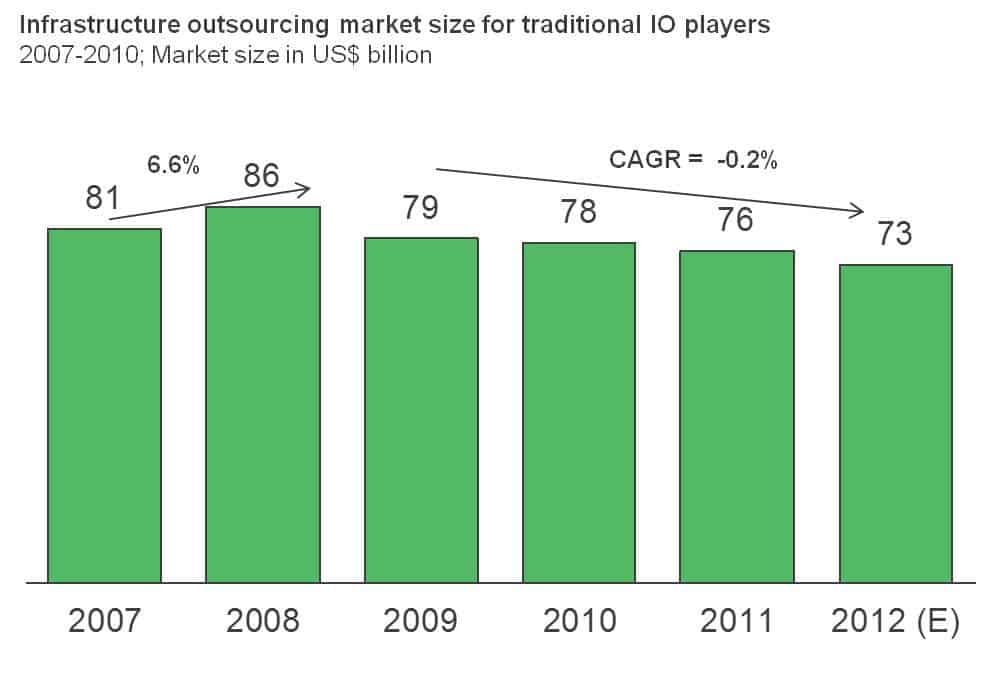

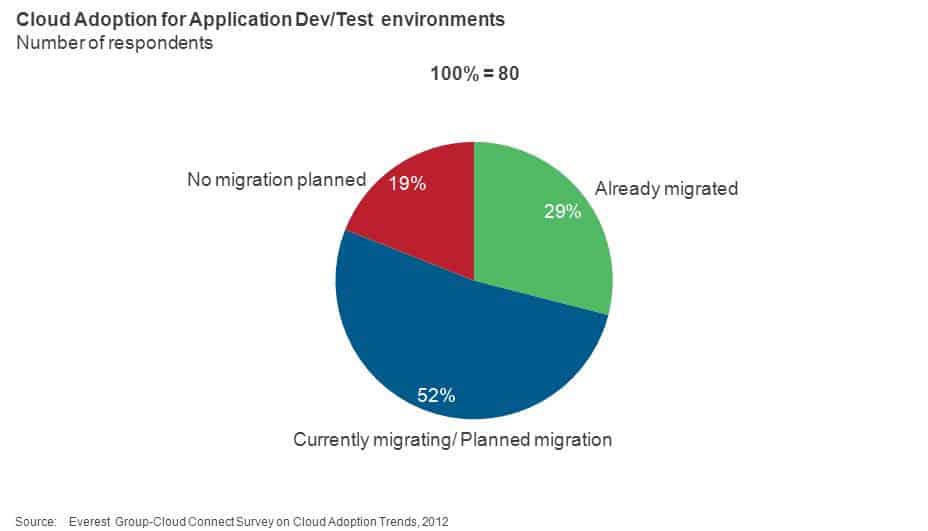

- Optimize: Seven years ago, that cool AWS deployment was the craziest, riskiest, hippest tech thing we could do. But I guess we’ve all aged (just a little bit) since then. The needle of cloud investment for most enterprises has moved from AWS migration (US$200 per application, anyone?) to effective orchestration and management – a clear case of the enterprise seeking to Optimize its investments in the bottom right corner of my diagram.

- Explore: On the top right, we have the new Wild, Wild West of the tech world. Blockchain can completely transform how the world fundamentally conducts commerce, IoT is working up steam, and artificial intelligence can shape a different version of human existence, not to mention business models. Enterprises need to Explore these to stay relevant in the future.

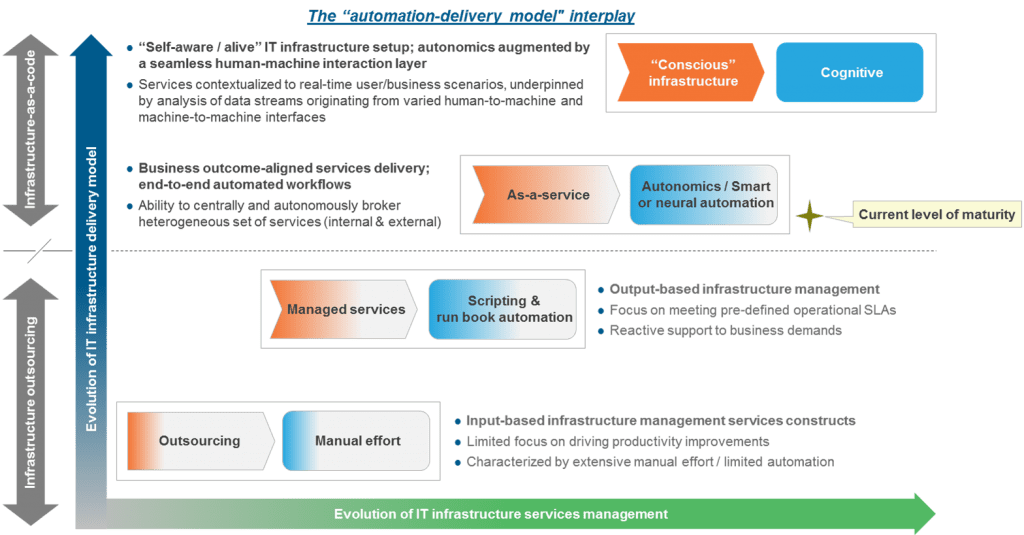

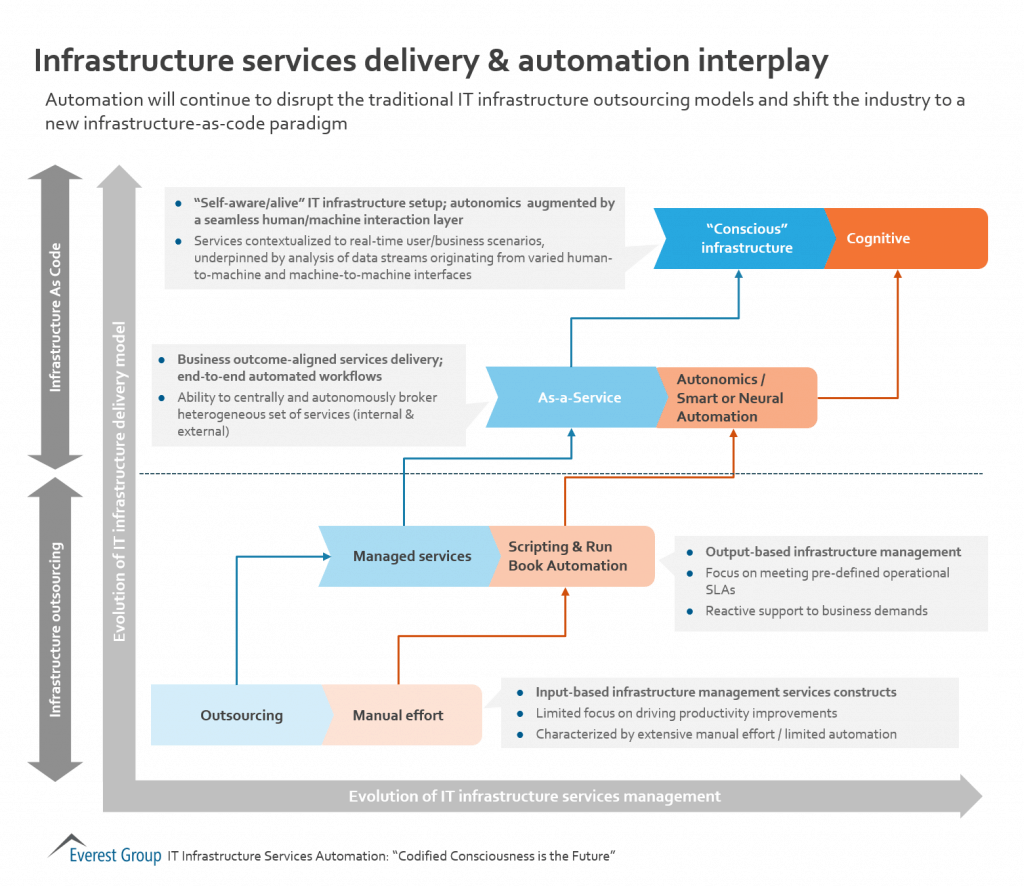

- Reimagine: What we cannot afford to miss out on is the exciting opportunity to Reimagine “traditional” global services into leaner and more effective models using a combination of enabling themes like automation, DevOps, and analytics. These are immediate opportunities that many enterprises consider essential to running effective operations in a traditional AND a digital world. For example:

  - In a world where “the app is the business,” QA is being reimagined as an ecosystem-driven, as-a-service play built on extensive automation and process platforms. The reimagined QA assures a digital business process and a digital experience – not just an app.

  - We are getting into the third generation of workplace services (first hardware-centric, then operations-centric, and now software- and experience-centric). The reimagined workplace service model delivers a highly contextual, user-aware experience, without sacrificing the long-range efficiency benefits.

  - Application management services (AMS) are being reimagined through extensive outcome modeling, automation instrumentation, and continuous monitoring.

Three principles for reimagining global services

It’s interesting to note that many of these reimagination exercises are based on three common foundational principles:

- Automation first: Automation and intelligence lie at the heart of our ability to reimagine technology services, because automation helps us deliver breakthrough outcomes without blowing the cost model out of the water.

- Speed first: The need to run ALL of IT at speed is driving reimagination and the corresponding investments. If you’re at the reimagination table, throw away your tools to build the perfect (and the biggest) mousetrap. A big part of the drive for reimagination is to move from scale-driven, arbitrage-first models to speed-driven, digital-first models.

- Alignment always: This is important, and it is good news. For decades, we’ve all complained about the absence of business-IT alignment. Reimagination tackles this issue by focusing on technology architecture that is open and scalable, and by delivering as-a-service consumption models that are closely linked to things that the business really cares about.

Over the next several months, Everest Group is going to publish viewpoints on each of these topics and more. But we’d love to hear any comments and questions you have right now. Please share with us and our readers!